UTF-8: the network standard

People who read websites in English or e-mails in Japanese are not just fluent in these languages; they have probably also had a successful UTF-8 experience. “UTF-8” is the abbreviation for “8-Bit UCS Transformation Format” and is the most widely used character encoding on the internet. The international Unicode standard covers the characters and text elements of (almost) all languages in the world for electronic data processing. UTF-8 plays an important role as an encoding of the Unicode character set.

The development of UTF-8 coding

UTF-8 is a form of character encoding. It assigns one specific bit sequence to each existing Unicode character, which can then be read as a binary number. In other words, UTF-8 assigns a fixed binary number to all letters, numbers, and symbols of an ever-growing number of languages. International organizations that set internet standards are working on making UTF-8 the indisputable standard for encoding; among them are the W3C and the Internet Engineering Task Force. In fact, most websites in the world were already using UTF-8 encoding in 2009. In March 2018, according to a W3Techs report, 90.9% of all existing websites used this method of character encoding.

Problems with implementing UTF-8

Different regions with related languages and writing systems each developed their own encoding standards because they had different requirements. For example, in the English-speaking world, ASCII, whose structure allows 128 characters to be assigned to computer-readable bit strings, was enough. However, Asian scripts and the Cyrillic alphabet use many more distinct characters. German umlauts (like the letter ä) are also missing from ASCII. Additionally, the assignments of different encodings can overlap. For example, a document written in Russian could be displayed on an American computer with the Latin letters assigned on that system instead of Cyrillic letters. The resulting gibberish made international communication considerably more difficult.
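The garbling described above can be reproduced in a few lines. The following Python sketch assumes, purely for illustration, that a Russian word was stored in the Windows-1251 code page and then read by a system that expects Latin-1; the sample word and the choice of code pages are assumptions, not part of the original account.

```python
# Illustrative only: the same bytes interpreted under two different legacy code pages.
text = "Привет"                      # Russian for "hello" (sample word chosen for this sketch)
raw = text.encode("cp1251")          # bytes as a Cyrillic (Windows-1251) system would store them
garbled = raw.decode("latin-1")      # what a system assuming Latin-1 would display

print(garbled)                       # accented Latin letters instead of Cyrillic
print(raw.decode("cp1251"))          # decoding with the matching code page restores the text
```

Both systems see exactly the same six bytes; only the table used to interpret them differs.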

Origins of UTF-8

To solve this problem, Joseph D. Becker developed the Unicode character set for Xerox between 1988 and 1991. From 1992, the IT industry consortium X/Open was also looking for a system that would replace ASCII and expand the character repertoire. The encoding was nevertheless supposed to remain compatible with ASCII. This requirement was not met by the first encoding, called UCS-2, which simply translated the character numbers into 16-bit values. UTF-1 also failed because its byte sequences partially collided with the existing ASCII character assignments. A server that was set to ASCII would sometimes output incorrect characters. This was a major problem, since the majority of English-language computers were working with ASCII at the time. The next step was Dave Prosser’s “File System Safe UCS Transformation Format” (FSS-UTF), which eliminated the overlap with ASCII characters.

In August of the same year, the draft was circulated among experts for review. At Bell Labs, known for its numerous Nobel Prize winners, Unix co-creator Ken Thompson and Rob Pike were working on the Plan 9 operating system. They took Prosser’s idea, developed a self-synchronizing encoding (each character indicates how many bytes it needs), and defined rules for the assignment of letters that could be represented in more than one way in the code (example: “ä” as a separate character or as “a+¨”). They successfully used the encoding in their operating system and introduced it to others. FSS-UTF, now known as “UTF-8,” was thus essentially complete.

UTF-8 is an 8-bit character encoding for Unicode. The abbreviation “UTF-8” stands for “8-Bit Universal Character Set Transformation Format.” One to four bytes, consisting of eight bits each, form a computer-readable binary number, which the encoding assigns to a language character or other text element. The self-synchronizing structure and the capacity for 2^21 (2,097,152) distinct values allow the unambiguous assignment of every single character and text element of all languages in the world.

UTF-8 as a Unicode character set: one standard for all languages

UTF-8 encoding is a transformation format within the Unicode standard. The international standard ISO 10646 defines Unicode in large parts under the name “Universal Coded Character Set.” The Unicode developers limit certain parameters for practical use, which is intended to ensure the globally uniform, compatible coding of characters and text elements. When it was introduced in 1991, Unicode defined 24 modern writing systems and currency symbols for data processing. In June 2017, there were 139.

There are various Unicode transformation formats (UTF for short), which map the 1,114,112 possible code points. Three formats have become established: UTF-8, UTF-16, and UTF-32. Other encodings, like UTF-7 or SCSU, also have their advantages, but have not been able to establish themselves.

Unicode is divided into 17 planes, each of which contains 65,536 code points, arranged as 256 rows of 256 cells. The first plane, the “Basic Multilingual Plane” (plane 0), comprises a large part of the writing systems currently in use around the world, as well as punctuation marks, control characters, and symbols. Five more planes are currently also in use:

- “Supplementary Multilingual Plane” (plane 1): historical writing systems, rarely used characters

- “Supplementary Ideographic Plane” (plane 2): rare CJK characters (Chinese, Japanese, Korean)

- “Supplementary Special-purpose Plane” (plane 14): a small number of special-purpose control characters

- “Supplementary Private Use Area-A” (plane 15): private use

- “Supplementary Private Use Area-B” (plane 16): private use
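Because every plane (or level) spans 65,536 code points, the plane of a character follows directly from its code point number. A minimal Python sketch; the helper name `plane_of` and the sample characters are chosen here for illustration only:

```python
def plane_of(char: str) -> int:
    """Return the number (0-16) of the Unicode plane a single character belongs to."""
    return ord(char) >> 16           # each plane spans 0x10000 = 65,536 code points

for ch in ["A", "ä", "ᅢ", "😀"]:
    print(f"U+{ord(ch):04X} -> plane {plane_of(ch)}")
```

The first three sample characters lie in the Basic Multilingual Plane; the emoji lies in plane 1.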

The UTF encodings give access to all Unicode characters. Their respective properties make each of them suitable for particular areas of application.

The alternatives: UTF-32 and UTF-16

UTF-32 always uses 32 bits, i.e. 4 bytes, per character. This simple structure makes the format easy to read and process. For languages that mainly use the Latin alphabet, and therefore only the first 128 characters, however, the encoding takes up much more memory than necessary (4 bytes instead of 1).

UTF-16 has established itself as a representation format in operating systems like Apple macOS and Microsoft Windows and is also used in many software development frameworks. It is one of the oldest UTFs still in use. Its structure is particularly suitable for the space-saving encoding of non-Latin scripts. Most characters can be represented in 2 bytes (16 bits), with only rare characters doubling in length to 4 bytes.

Efficient and scalable: UTF-8

UTF-8 consists of up to four bytes of 8 bits each. Its predecessor ASCII, on the other hand, uses a single bit string of 7 bits. Both encodings define the first 128 characters identically. These characters, which mainly originate in the English-speaking world, are each covered by one byte. For languages with a Latin alphabet, this format uses storage space most efficiently. The operating systems Unix and Linux use it internally. However, UTF-8 plays its most important role in internet applications, namely in the representation of text on the web or in e-mails.
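The storage trade-off between the three formats is easy to measure. The sketch below counts the bytes that a few sample strings occupy in each encoding; the sample texts and the helper name `encoded_sizes` are assumptions made for this illustration, and the little-endian codec variants are used so that no byte order mark is counted.

```python
samples = {
    "English": "Hello, world",
    "German": "Grüße",
    "Japanese": "こんにちは",
}

def encoded_sizes(text: str) -> dict:
    """Number of bytes the text occupies in each UTF, without a byte order mark."""
    return {enc: len(text.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}

for label, text in samples.items():
    print(label, encoded_sizes(text))
```

For the Latin-script samples UTF-8 is the smallest, while for the Japanese sample UTF-16 needs fewer bytes than UTF-8.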

Thanks to the self-synchronizing structure, readability is maintained despite the variable length per character. Without the Unicode restrictions, 2^42 (= 4,398,046,511,104) character assignments would be possible using UTF-8; with the 4-byte limit in Unicode, the figure is effectively 2^21, which is more than sufficient. The Unicode code space even has empty planes for many other writing systems. The exact assignment prevents the code point overlaps that restricted communication in the past. While UTF-16 and UTF-32 also allow exact mapping, UTF-8 uses storage space particularly efficiently for the Latin writing system and is designed to allow different writing systems to coexist without problems. This allows them to be displayed together meaningfully within one text field, without any compatibility problems.

Basics: UTF-8 encoding and composition

UTF-8 encoding is backward compatible with ASCII and has a self-synchronizing structure, which makes it easier for developers to identify sources of error afterwards. UTF-8 uses just 1 byte for all ASCII characters. The total number of bytes in a sequence is indicated by the first few digits of the binary number. Since the ASCII code only contains 7 bits, the first digit is always 0. This 0 pads the code to a full byte and signals the start of a sequence that has no subsequent bytes. For example, the name “UTF-8” would be expressed as a binary number with UTF-8 encoding as follows:

| Characters | U | T | F | - | 8 |
|---|---|---|---|---|---|
| UTF-8, binary | 01010101 | 01010100 | 01000110 | 00101101 | 00111000 |
| Unicode code point, hexadecimal | U+0055 | U+0054 | U+0046 | U+002D | U+0038 |
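The binary row of the table can be reproduced with Python's built-in UTF-8 codec. This is only a small sketch; the helper name `utf8_bits` is made up for the example.

```python
def utf8_bits(text: str) -> list:
    """Return each UTF-8 byte of the text as an 8-digit binary string."""
    return [f"{byte:08b}" for byte in text.encode("utf-8")]

print(utf8_bits("UTF-8"))
# ['01010101', '01010100', '01000110', '00101101', '00111000']
```

Every byte starts with 0, which marks it as a complete single-byte (ASCII) character.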

UTF-8 encodes ASCII characters like those in the above table as a single byte. All other characters and symbols in Unicode take two to four bytes. The first byte is called the start byte; additional bytes are sequence bytes. Start bytes that are followed by sequence bytes always begin with 11. Sequence bytes always begin with 10. If you search manually for a specific point in the code, you can recognize the beginning of an encoded character by the 0 and 11 markers. The first printable multi-byte character is the inverted exclamation mark:

| Characters | ¡ |
|---|---|
| UTF-8, binary | 11000010 10100001 |
| Unicode code point, hexadecimal | U+00A1 |

The prefix code prevents an additional character from being encoded within a byte sequence. If a byte stream starts in the middle of a document, the computer still displays the readable characters correctly, because it does not display incomplete characters at all. If you search for the beginning of a character, the 4-byte limit means that you must go back a maximum of three bytes from any point to find the start byte.
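The backward search for a start byte can be sketched as follows. The function name `char_start` and the sample string are assumptions for this illustration; the code relies only on the rule that sequence bytes begin with the bits 10.

```python
def char_start(data: bytes, pos: int) -> int:
    """Step back from pos to the nearest byte that is not a sequence byte (10xxxxxx)."""
    while pos > 0 and (data[pos] & 0b1100_0000) == 0b1000_0000:
        pos -= 1
    return pos

data = "a¡ᅢ😀".encode("utf-8")   # characters of 1, 2, 3, and 4 bytes
print([char_start(data, i) for i in range(len(data))])
# [0, 1, 1, 3, 3, 3, 6, 6, 6, 6]
```

In well-formed UTF-8 the loop never steps back more than three positions, which matches the 4-byte limit.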

Another structuring element: the number of ones at the beginning of the start byte indicates the length of the byte sequence. 110xxxxx stands for 2 bytes, as shown above, 1110xxxx for 3 bytes, and 11110xxx for 4 bytes. In UTF-8, the order of the byte values follows the order of the Unicode character numbers, which enables a lexical order. But there are gaps. The Unicode range U+007F to U+009F contains control characters. No printable characters are assigned there, just commands.
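Reading the length of a sequence from its first byte can be written as a handful of bit-mask comparisons. The following is a minimal sketch with a hypothetical helper name, `sequence_length`; it only inspects the leading bits and does not check whether the following bytes are valid.

```python
def sequence_length(first_byte: int) -> int:
    """Length in bytes of a UTF-8 sequence, judged by the leading bits of its first byte."""
    if (first_byte & 0b1000_0000) == 0b0000_0000:   # 0xxxxxxx
        return 1
    if (first_byte & 0b1110_0000) == 0b1100_0000:   # 110xxxxx
        return 2
    if (first_byte & 0b1111_0000) == 0b1110_0000:   # 1110xxxx
        return 3
    if (first_byte & 0b1111_1000) == 0b1111_0000:   # 11110xxx
        return 4
    # 10xxxxxx is a sequence byte; 11111xxx would announce 5 or more bytes
    raise ValueError(f"{first_byte:#04x} is not a start byte")

print(sequence_length(0b11000010))   # 2, the start byte of the inverted exclamation mark
```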

UTF-8 encoding can theoretically string up to eight bytes together, but Unicode prescribes a maximum length of 4 bytes. One consequence is that byte sequences of 5 bytes or more are invalid. This limitation also reflects the effort to keep the code as compact (i.e. with as little storage load) and as structured as possible. A basic rule when using UTF-8 is that the shortest possible encoding must always be used for a code point. For example, the letter ä (U+00E4) is encoded by 2 bytes: 11000011 10100100. Padding the same code point with leading zeros to fill 3 bytes would be an overlong encoding and is inadmissible. This should not be confused with the decomposed spelling of ä, which combines the code points for the letter a (01100001) and the combining diaeresis ¨ (11001100 10001000): 01100001 11001100 10001000. That sequence is valid UTF-8; it simply consists of two code points instead of one.

This rule is the reason that byte sequences beginning with the byte values 192 and 193 (11000000 and 11000001) are not allowed: they could only represent characters in the ASCII range (0-127) with 2 bytes, although these are already encoded with 1 byte.
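A strict decoder enforces this rule. The sketch below uses Python's built-in UTF-8 codec, which rejects the start bytes 192 and 193; the wrapper name `is_valid_utf8` is an assumption for the example.

```python
def is_valid_utf8(data: bytes) -> bool:
    """True if Python's strict UTF-8 decoder accepts the byte string."""
    try:
        data.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False

print(is_valid_utf8(bytes([0x41])))          # "A" in one byte: accepted
print(is_valid_utf8(bytes([0xC1, 0x81])))    # "A" spread over two bytes: rejected
print(is_valid_utf8(bytes([0xC0, 0x80])))    # U+0000 spread over two bytes: rejected
```

Rejecting such sequences matters in practice, because otherwise the same character could slip past a filter in a second, unexpected spelling.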

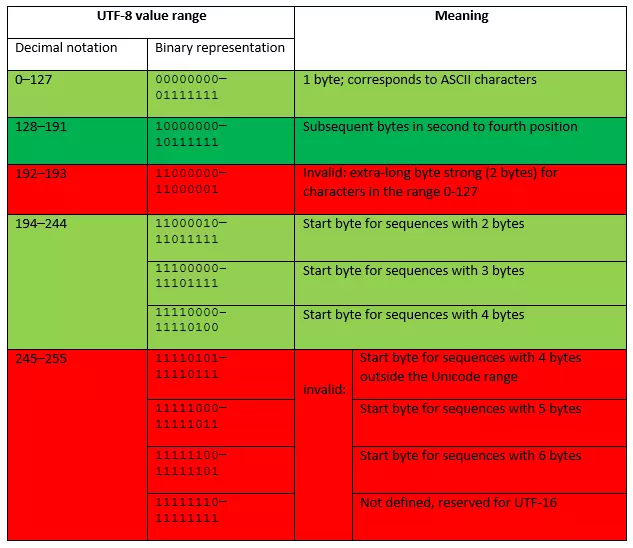

Some Unicode value ranges were not defined for UTF-8 because they are reserved for UTF-16 surrogates. The overview shows which bytes are allowed in UTF-8 under Unicode according to the Internet Engineering Task Force (IETF): areas marked green are valid bytes; areas marked red are invalid.

Converting from Unicode hexadecimal to UTF-8 binary

Computers only read binary numbers; humans use the decimal system. The hexadecimal system serves as an interface between the two and makes long bit strings compact. It uses the digits 0 to 9 and the letters A to F and is based on the number 16. Because 16 is the fourth power of 2, the hexadecimal system is better suited than the decimal system for representing bytes of eight binary digits. One hexadecimal digit stands for a nibble (four bits) within the octet, so a byte with eight binary digits can be represented with just two hexadecimal digits. Unicode uses the hexadecimal system to describe the position of a character within its own system. The UTF-8 code can then be calculated from the binary form of this number.

First, the hexadecimal number must be converted to a binary number. Then the bits of the code point are fitted into the structure of the UTF-8 encoding. To simplify this step, use the following overview, which shows how many code point bits fit into a byte sequence and which structure you can expect in each Unicode value range.

| Size in bytes | Free bits for the code point | First Unicode code point | Last Unicode code point | Start byte / Byte 1 | Sequence byte 2 | Sequence byte 3 | Sequence byte 4 |
|---|---|---|---|---|---|---|---|
| 1 | 7 | U+0000 | U+007F | 0xxxxxxx | | | |
| 2 | 11 | U+0080 | U+07FF | 110xxxxx | 10xxxxxx | | |
| 3 | 16 | U+0800 | U+FFFF | 1110xxxx | 10xxxxxx | 10xxxxxx | |
| 4 | 21 | U+10000 | U+10FFFF | 11110xxx | 10xxxxxx | 10xxxxxx | 10xxxxxx |

Within a code range, you can predict the number of bytes used, since the lexical order is observed when numbering the Unicode code points and the UTF-8 binary numbers. Within the range U+0800 to U+FFFF, UTF-8 uses 3 bytes, in which 16 bits are available for expressing the code point of a symbol. The calculated binary number is placed in the UTF-8 scheme from right to left, filling any blanks on the left with zeros.
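The table above translates almost line by line into code. The following Python function is a hand-written sketch for illustration, not a replacement for a library codec; the name `encode_code_point` is hypothetical, and the explicit rejection of the surrogate range reflects that these values are reserved for UTF-16.

```python
def encode_code_point(cp: int) -> bytes:
    """Encode one Unicode code point by filling the bit patterns from the table."""
    if cp < 0 or cp > 0x10FFFF:
        raise ValueError("code point outside the Unicode range")
    if 0xD800 <= cp <= 0xDFFF:
        raise ValueError("surrogate values are reserved for UTF-16")
    if cp <= 0x7F:                                   # 0xxxxxxx
        return bytes([cp])
    if cp <= 0x7FF:                                  # 110xxxxx 10xxxxxx
        return bytes([0b1100_0000 | (cp >> 6),
                      0b1000_0000 | (cp & 0b11_1111)])
    if cp <= 0xFFFF:                                 # 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0b1110_0000 | (cp >> 12),
                      0b1000_0000 | ((cp >> 6) & 0b11_1111),
                      0b1000_0000 | (cp & 0b11_1111)])
    return bytes([0b1111_0000 | (cp >> 18),          # 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
                  0b1000_0000 | ((cp >> 12) & 0b11_1111),
                  0b1000_0000 | ((cp >> 6) & 0b11_1111),
                  0b1000_0000 | (cp & 0b11_1111)])

print(encode_code_point(0x00A1).hex(" "))   # c2 a1
```

Shifting right by 6, 12, or 18 bits selects the bits for each byte, and the mask `0b11_1111` keeps the six free bits of a sequence byte.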

Sample calculation:

The symbol ᅢ (Hangul Jungseong AE) is located at the Unicode code point U+1162. To calculate the binary number, convert the hexadecimal number to a decimal number first. Each digit in the number corresponds to a power of 16, with the rightmost digit having the lowest place value of 16^0 = 1. Starting from the right, multiply the numerical value of each digit by its power of 16, then add up the results.

| Digit | | Power of 16 | | Product |
|---|---|---|---|---|
| 2 | * | 1 | = | 2 |
| 6 | * | 16 | = | 96 |
| 1 | * | 256 | = | 256 |
| 1 | * | 4096 | = | 4096 |
| Sum | | | | 4450 |

4450 is the calculated decimal number. Now convert it to a binary number. To do this, divide the number by 2 repeatedly until the quotient is 0, noting the remainder of each division. The remainders, written from right to left, form the binary number.

| Number | Divisor | | Quotient | | Remainder |
|---|---|---|---|---|---|
| 4450 | : 2 | = | 2225 | Remainder: | 0 |
| 2225 | : 2 | = | 1112 | Remainder: | 1 |
| 1112 | : 2 | = | 556 | Remainder: | 0 |
| 556 | : 2 | = | 278 | Remainder: | 0 |
| 278 | : 2 | = | 139 | Remainder: | 0 |
| 139 | : 2 | = | 69 | Remainder: | 1 |
| 69 | : 2 | = | 34 | Remainder: | 1 |
| 34 | : 2 | = | 17 | Remainder: | 0 |
| 17 | : 2 | = | 8 | Remainder: | 1 |
| 8 | : 2 | = | 4 | Remainder: | 0 |
| 4 | : 2 | = | 2 | Remainder: | 0 |
| 2 | : 2 | = | 1 | Remainder: | 0 |
| 1 | : 2 | = | 0 | Remainder: | 1 |
| Result: 1000101100010 | | | | | |
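The two manual steps, hexadecimal to decimal and then repeated division by 2, can be checked with a short Python sketch. The function name `to_binary` is made up for this example.

```python
def to_binary(number: int) -> str:
    """Convert a non-negative integer to binary by repeated division by 2."""
    if number == 0:
        return "0"
    digits = []
    while number > 0:
        number, remainder = divmod(number, 2)
        digits.append(str(remainder))      # remainders arrive lowest bit first
    return "".join(reversed(digits))       # so they are joined in reverse order

decimal = int("1162", 16)     # hexadecimal 1162 as a decimal number
print(decimal)                # 4450
print(to_binary(decimal))     # 1000101100010
```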

| hexadecimal | binary |
|---|---|
| 0 | 0000 |
| 1 | 0001 |
| 2 | 0010 |
| 3 | 0011 |
| 4 | 0100 |
| 5 | 0101 |
| 6 | 0110 |
| 7 | 0111 |
| 8 | 1000 |
| 9 | 1001 |
| A | 1010 |
| B | 1011 |
| C | 1100 |
| D | 1101 |
| E | 1110 |
| F | 1111 |

The detailed example above lets you follow the calculation steps exactly. If you want to convert hexadecimal numbers into binary numbers quickly and easily, just replace each hex digit with the corresponding four binary digits from the conversion table above.

For the Unicode point U+1162, join the digits as follows:

x1 = b0001

x1 = b0001

x6 = b0110

x2 = b0010

x1162 = b0001000101100010
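The digit-by-digit substitution is a one-liner in code. A small sketch, with the helper name `hex_to_binary` chosen for illustration:

```python
def hex_to_binary(hex_digits: str) -> str:
    """Replace every hexadecimal digit by its four-bit binary equivalent."""
    return "".join(f"{int(digit, 16):04b}" for digit in hex_digits)

print(hex_to_binary("1162"))   # 0001000101100010
```

Unlike the division method, this shortcut keeps the leading zeros, so the result always has four bits per hexadecimal digit.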

UTF-8 prescribes 3 bytes for the U+1162 code point, because the code point lies between U+0800 and U+FFFF. The start byte begins with 1110, the two following bytes begin with 10, and the binary number is inserted from right to left into the free bits that do not specify the structure. Fill any remaining free bits in the start byte with 0 until the octet is full. The UTF-8 encoding looks like this:

1110**0001** 10**000101** 10**100010** (the inserted code point is bold)

| Symbol | Unicode code point, hexadecimal | Decimal number | Binary number | UTF-8 |
|---|---|---|---|---|
| ᅢ | U+1162 | 4450 | 1000101100010 | 11100001 10000101 10100010 |

UTF-8 in editors

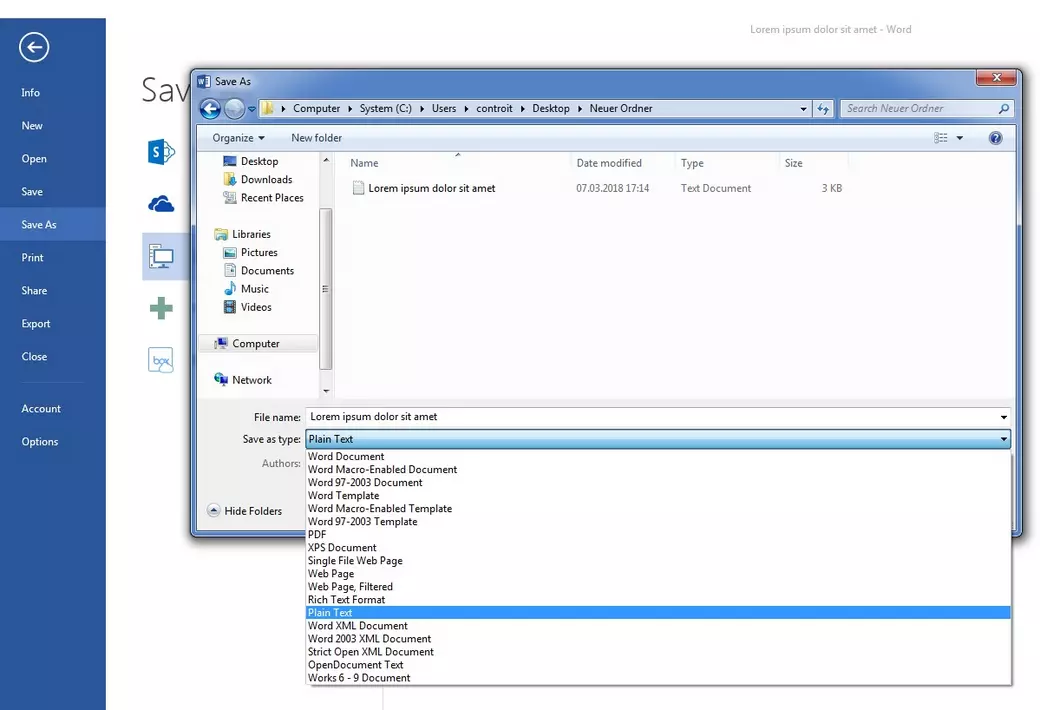

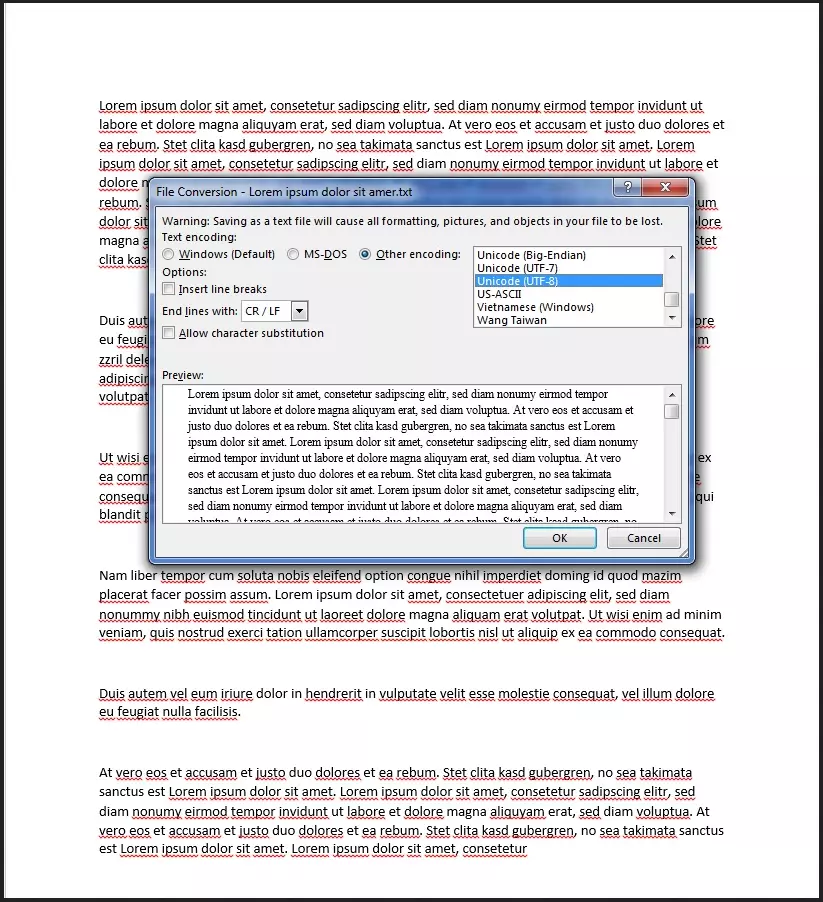

UTF-8 is the most widely used standard on the internet, but simple text editors do not necessarily save texts in this format by default. For example, Microsoft Notepad uses an encoding called “ANSI” by default. If you want to convert a text file from Microsoft Word to UTF-8 (for example, to represent different writing systems), proceed as follows: Go to “Save as” and select the option “Text only” under “File type.”

The pop-up window “File conversion” opens. Under “Text Encoding,” select “Other Encoding” and then “Unicode (UTF-8)” from the list. Select “Carriage return/line feed” or “CR/LF” from the “End lines with” drop-down menu. Converting a file to the Unicode character set with UTF-8 is that simple.

If you open an unknown file and do not know which encoding it uses, problems can occur while you are editing. Unicode provides the “Byte Order Mark” (BOM) for this situation. This invisible character indicates whether the document is in big-endian or little-endian format. If a program decodes a UTF-16 little-endian file as UTF-16 big-endian, the text will be output incorrectly. Documents encoded in UTF-8 do not have this problem, since the byte order of UTF-8 is fixed. In this case, the BOM merely shows that the document is UTF-8 encoded.

In some encodings (UTF-16 and UTF-32), characters represented by more than one byte may have the most significant byte at the front (left) or rear (right) position. If the most significant byte (MSB) is at the front, the encoding is given the suffix “big-endian”; if the MSB is at the back, the suffix is “little-endian.”
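The difference between the two byte orders becomes visible when the same character is encoded both ways. The sketch below reuses the Hangul character U+1162 from the sample calculation and Python's standard codec names.

```python
char = "ᅢ"   # U+1162

print(char.encode("utf-16-be").hex(" "))   # 11 62  (most significant byte first)
print(char.encode("utf-16-le").hex(" "))   # 62 11  (least significant byte first)
print(char.encode("utf-8").hex(" "))       # e1 85 a2  (UTF-8 has only one byte order)
```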

You can place the BOM before a data stream or at the beginning of a file. This marker takes precedence over all other encoding declarations, including the HTTP header. The BOM serves as a kind of signature for Unicode encodings and has the code point U+FEFF. Depending on which encoding is used, the BOM appears as a different byte sequence in encoded form.

| Encoding format | BOM, code point: U+FEFF (hex.) |
|---|---|
| UTF-8 | EF BB BF |
| UTF-16 big-endian | FE FF |
| UTF-16 little-endian | FF FE |
| UTF-32 big-endian | 00 00 FE FF |
| UTF-32 little-endian | FF FE 00 00 |
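A program can use these signatures to guess the encoding of a byte stream. The following is a minimal sketch built on the BOM constants of Python's `codecs` module; the function name `sniff_bom` and the returned labels are assumptions for this example. Note that the UTF-32 signatures have to be tested before the UTF-16 ones, because the UTF-32 little-endian BOM begins with the same two bytes as the UTF-16 little-endian BOM.

```python
import codecs
from typing import Optional

# Order matters: longer signatures that share a prefix with shorter ones come first.
BOMS = [
    (codecs.BOM_UTF32_BE, "UTF-32 big-endian"),
    (codecs.BOM_UTF32_LE, "UTF-32 little-endian"),
    (codecs.BOM_UTF8, "UTF-8"),
    (codecs.BOM_UTF16_BE, "UTF-16 big-endian"),
    (codecs.BOM_UTF16_LE, "UTF-16 little-endian"),
]

def sniff_bom(data: bytes) -> Optional[str]:
    """Return the encoding announced by a leading BOM, or None if there is none."""
    for bom, name in BOMS:
        if data.startswith(bom):
            return name
    return None

print(sniff_bom(b"\xef\xbb\xbfHello"))   # UTF-8
print(sniff_bom(b"Hello"))               # None
```

A missing BOM does not prove anything about the encoding; the function then simply returns `None`.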

Do not use the BOM if the protocol explicitly prohibits it, or if your data is already assigned to a particular type. Some programs expect ASCII characters, depending on the protocol. Since UTF-8 is backward compatible with ASCII and its byte order is fixed, you do not need a BOM. In fact, Unicode recommends not using a BOM with UTF-8. However, since a BOM can occur in older code and can cause problems, it is important to be able to identify the BOM as such.
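One way to cope with files that may or may not carry a UTF-8 BOM is to decode them with a codec that removes the signature when it is present. The sketch below uses Python's `utf-8-sig` codec for this; the sample bytes are made up for the illustration.

```python
data = b"\xef\xbb\xbfHello"               # UTF-8 text preceded by a BOM

plain = data.decode("utf-8")              # the BOM survives as the character U+FEFF
clean = data.decode("utf-8-sig")          # the BOM, if present, is dropped

print(repr(plain))   # '\ufeffHello'
print(repr(clean))   # 'Hello'
```

Text without a BOM passes through `utf-8-sig` unchanged, so the codec can be applied regardless of whether a signature is present.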

Conclusion: UTF-8 encoding improves international communication

UTF-8 has many advantages, and backward compatibility with ASCII is just one of them. Due to its variable byte sequence length and the enormous number of possible code points, it can represent an extremely large number of different writing systems, and it uses storage space efficiently in doing so.

The introduction of uniform standards like Unicode facilitates international communication. Thanks to its self-synchronizing byte sequences, UTF-8 encoding is characterized by a low error rate in character representation. Even if an encoding or transmission error occurs, you can find the beginning of each character just by looking for the bytes in the sequence that start with 0 or 11. With a usage rate of more than 90 percent, UTF-8 has become the undisputed encoding standard for websites.