What are recurrent neural networks?

Recurrent neural networks use feedback to create a kind of memory. They are used primarily for sequential data and are comparatively demanding to train.

What is a recurrent neural network?

A recurrent neural network (RNN) is an artificial neural network with feedback connections: the output of a neuron can be fed back into the network as input. RNNs are used frequently in deep learning and AI to process sequential data. Because they take information from previous inputs into account, recurrent neural networks develop a kind of memory, and their output depends on earlier elements in the sequence. There are different types of feedback. They make the networks more flexible but also make them harder to train.
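
For the simplest variant, often called an Elman network, this memory can be written as a recurrence. The notation is an assumption chosen for illustration, not taken from a specific source: $x_t$ is the input at step $t$, $h_t$ the hidden state, $y_t$ the output, and $W_{xh}$, $W_{hh}$, $W_{hy}$, $b_h$, $b_y$ are learned weights and biases.

$$
\begin{aligned}
h_t &= \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right), \qquad h_0 = 0,\\
y_t &= W_{hy}\,h_t + b_y
\end{aligned}
$$

The term $W_{hh}\,h_{t-1}$ is the feedback: the previous hidden state re-enters the computation, so $y_t$ depends on all earlier inputs $x_1, \dots, x_t$, not just on $x_t$. The same matrices are used at every step $t$.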

How do recurrent neural networks work?

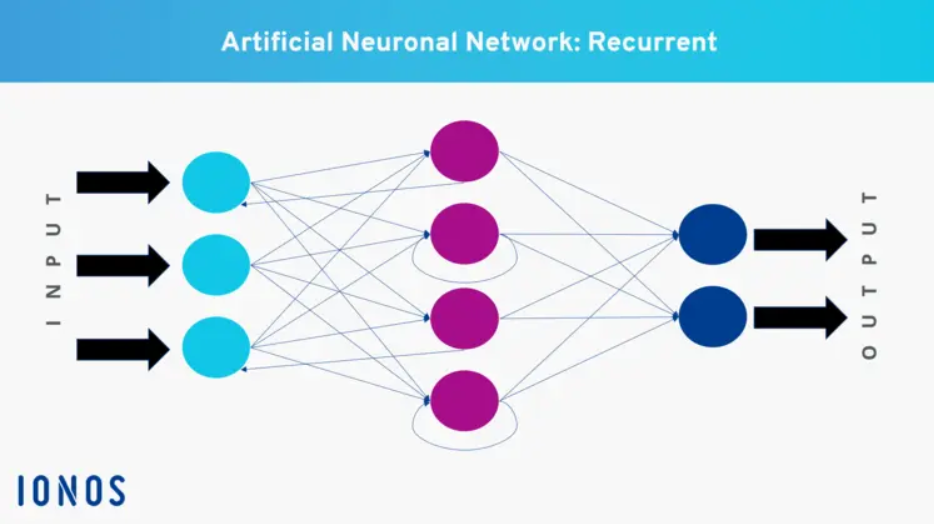

At first glance, recurrent neural networks are built like other neural networks. They consist of at least three layers, which in turn contain neurons (nodes) that are connected to each other: an input layer, an output layer and any number of hidden layers in between.

- Input layer: This layer is where information from the outside world is received. That information is then weighted and passed on to the next layer.

- Hidden layer(s): In each hidden layer, the information is weighted again and passed on, layer by layer. These intermediate computations are not visible from outside the network, which is where the hidden layers get their name.

- Output layer: The weighted and modified information is then output. That output is either the end result or serves as the input for other neurons.
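
The layer-by-layer weighting can be sketched for a single input, before any feedback is added. This is a minimal illustration that assumes fully connected layers, a tanh activation and made-up weights; `dense` and `forward` are hypothetical helper names. The input layer simply holds the input values, and each row of a weight matrix holds the incoming weights of one neuron in the next layer.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias,
    passed through tanh. Each row of `weights` belongs to one neuron."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass the input through each (weights, biases) layer in order."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation

# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.5, 0.2]], [0.05])

y = forward([1.0, 0.5], [hidden, output])
print(y)  # a list with one value between -1 and 1
```

In this purely feedforward pass, information only moves from one layer to the next; the recurrent versions below add a path back.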

What makes recurrent neural networks special is that information does not have to flow in only one direction. Thanks to feedback, a neuron's output doesn't necessarily have to be sent to the next layer. It can also be used as input for the same neuron, for another neuron in the same layer or for a neuron in a previous layer. In addition, the same weights are reused at every step of the input sequence instead of being learned separately for each step. Feedback is divided into four categories:

- Direct feedback: The output is used as input for the same neuron.

- Indirect feedback: The output is fed back as input to a neuron in a previous layer.

- Lateral feedback: The output is connected to the input of another neuron in the same layer.

- Full feedback: The outputs of all neurons are connected to all other neurons in the network.
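
Within one layer, the feedback types can be pictured as patterns in a recurrent weight matrix whose entry `[i][j]` is the weight from neuron j's previous output to neuron i. The sketch below is a hypothetical illustration with made-up weights, not a standard API: `feedback_mask` and `run_layer` are names chosen here. Indirect feedback is left out because it links to a previous layer, and full feedback is approximated, as an assumption, by a fully connected matrix within the layer, self-connections included. The same input and recurrent weights are reused at every time step.

```python
import math

def feedback_mask(kind, n):
    """Hypothetical helper: n x n 0/1 mask where mask[i][j] == 1 lets
    neuron j's previous output feed into neuron i."""
    if kind == "direct":   # each neuron only sees its own previous output
        return [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    if kind == "lateral":  # each neuron sees the other neurons in its layer
        return [[0 if i == j else 1 for j in range(n)] for i in range(n)]
    if kind == "full":     # assumption: every neuron sees every neuron
        return [[1] * n for _ in range(n)]
    raise ValueError(f"unknown feedback kind: {kind}")

def run_layer(sequence, w_in, w_rec, kind):
    """Process scalar inputs with one recurrent layer; w_in and the masked
    w_rec are the same at every time step."""
    n = len(w_in)
    mask = feedback_mask(kind, n)
    h = [0.0] * n  # no memory before the first input
    for x in sequence:
        # The comprehension reads the old h, then h is replaced as a whole.
        h = [
            math.tanh(w_in[i] * x
                      + sum(mask[i][j] * w_rec[i][j] * h[j] for j in range(n)))
            for i in range(n)
        ]
    return h

w_in = [0.5, -0.4, 0.3]
w_rec = [[0.9, 0.2, -0.1], [0.3, 0.8, 0.1], [-0.2, 0.4, 0.7]]
for kind in ("direct", "lateral", "full"):
    print(kind, run_layer([1.0, 0.0, 0.0], w_in, w_rec, kind))
```

Because the hidden state `h` is carried from one step to the next, the layer still produces non-zero values after the input drops to 0.0, which is the kind of memory the feedback creates.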

How are recurrent neural networks trained?

Training recurrent neural networks is difficult. They are usually trained with backpropagation through time, in which the error signal is passed backward through every step of the sequence. Over long sequences, this signal tends to vanish or explode, so information from the distant past is taken into account only weakly, if at all. Architectures such as Long Short-Term Memory (LSTM) are often used to solve this problem, as they allow information to be stored for longer. With the right training, recurrent neural networks can distinguish important from unimportant information and feed the former back into the network.
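
The problem with the distant past can be made concrete. In backpropagation through time, the gradient of a late hidden state with respect to an early one is a product of one factor per time step. The sketch below assumes a one-neuron RNN $h_t = \tanh(w\,h_{t-1} + x_t)$ with an illustrative weight `w = 0.8`; `hidden_states` and `gradient_to_start` are hypothetical helper names. Each factor is $w(1 - h_t^2)$, so when its magnitude stays below 1, the product shrinks toward zero as the sequence gets longer (the vanishing-gradient problem).

```python
import math

def hidden_states(xs, w, h0=0.0):
    """Run the one-neuron RNN h_t = tanh(w * h_{t-1} + x_t), keeping every state."""
    states = [h0]
    for x in xs:
        states.append(math.tanh(w * states[-1] + x))
    return states

def gradient_to_start(xs, w):
    """d h_T / d h_0 by the chain rule: the product of the per-step
    derivatives w * (1 - h_t**2), as computed in backpropagation through time."""
    states = hidden_states(xs, w)
    grad = 1.0
    for h in states[1:]:
        grad *= w * (1.0 - h * h)
    return grad

xs = [0.5] * 50  # constant toy input sequence
for T in (1, 5, 20, 50):
    print(T, gradient_to_start(xs[:T], w=0.8))
```

With this weight, every factor has magnitude at most 0.8, so after 50 steps the gradient is bounded by 0.8**50. An early input therefore barely influences the weight updates, which is the situation LSTMs were designed to improve.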

What kinds of architectures are there?

There are various kinds of architectures for RNNs:

- One to many: The RNN receives one input and returns multiple outputs. An example is image captioning, where a sentence is generated from a single image.

- Many to one: Several inputs are mapped to a single output. This type of architecture is used in sentiment analysis, for example, where a single sentiment (such as positive or negative) is predicted from a sequence of words, such as a product review.

- Many to many: Several inputs are used to produce several outputs. The number of inputs and outputs doesn't have to be the same. This type is used in machine translation, for example, when a sentence is translated from one language into another.
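
The three patterns differ mainly in how inputs are fed in and which hidden states are read out. The sketch below uses a hypothetical one-neuron RNN cell with fixed, untrained weights (`step`, `W_X`, `W_H` and `W_Y` are illustrative names), so the numbers themselves mean nothing; only the number of inputs and outputs matters. The many-to-many case is written as an encoder-decoder, one common way to let the output length differ from the input length. For brevity, encoder and decoder share one cell here, whereas real systems usually give them separate weights.

```python
import math

W_X, W_H, W_Y = 0.6, 0.5, 1.2  # hypothetical, untrained weights

def step(h, x):
    """One recurrent step: combine the new input with the previous state."""
    return math.tanh(W_X * x + W_H * h)

def many_to_one(xs):
    """E.g. sentiment analysis: read the whole sequence, emit one output."""
    h = 0.0
    for x in xs:
        h = step(h, x)
    return W_Y * h

def one_to_many(x, length):
    """E.g. captioning: one input, then the state keeps producing outputs."""
    h = step(0.0, x)
    outputs = []
    for _ in range(length):
        outputs.append(W_Y * h)
        h = step(h, 0.0)  # no new input after the first step
    return outputs

def many_to_many(xs, out_length):
    """E.g. translation: encode all inputs into one state, then decode
    out_length outputs, which need not equal len(xs)."""
    h = 0.0
    for x in xs:                 # encoder
        h = step(h, x)
    outputs = []
    for _ in range(out_length):  # decoder feeds back its own last output
        y = W_Y * h
        outputs.append(y)
        h = step(h, y)
    return outputs

print(many_to_one([0.2, 0.4, 0.6]))
print(one_to_many(1.0, 4))
print(many_to_many([0.2, 0.4, 0.6], 5))
```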

What are the most important applications of recurrent neural networks?

Recurrent neural networks are especially useful when context matters for processing information. This is particularly true for sequential data, which has a fixed order or temporal sequence. In such cases, RNNs can predict a result well based on the context of previous inputs. That makes them suitable for areas like the following:

- Forecasts and time series data: RNNs are often used to predict events or values based on historical data, as in financial market analysis, weather forecasts and energy use.

- Natural language processing (NLP): RNNs are widely used in natural language processing, for example for machine translation, language modeling, text recognition and automatic text generation.

- Speech recognition: RNNs play a key role in speech recognition technologies, where they’re used to convert spoken language into text. That technology is used in digital assistants and automatic transcription services.

- Image and video analysis: While RNNs are primarily used for processing sequential data, they can also be used in image and video analysis. That’s especially true when it comes to processing image sequences and videos, as is done for video summaries and detecting activity on security cameras.
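
For forecasting, a time series is commonly cut into overlapping windows so that an RNN can be trained in a many-to-one setup: the values in each window form the input sequence, and the value that follows is the target. The helper below, `make_windows`, is a hypothetical name for this preprocessing step, and the series and window length of 3 are made-up values for illustration, not real data.

```python
def make_windows(series, window):
    """Split a time series into (input sequence, next value) training pairs."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        (series[i:i + window], series[i + window])
        for i in range(len(series) - window)
    ]

# Made-up values, e.g. one reading per day.
series = [10, 12, 13, 15, 14, 16, 18]
for inputs, target in make_windows(series, window=3):
    print(inputs, "->", target)
```

Each pair would then be fed to the network one value at a time, and only the final output compared with the target.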

What is the difference between RNNs and feedforward neural networks?

Recurrent neural networks go a step further than feedforward neural networks (FNNs), which don't allow feedback. In FNNs, information flows in only one direction, from each layer to the next. Feeding information back to earlier neurons is therefore not possible. Although FNNs can recognize patterns, they cannot draw on information they have already processed, so each input is handled independently of the ones before it.
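
The difference shows up when the same input arrives at different points in a sequence. In the hypothetical sketch below, which uses one neuron and arbitrary weights (`W_X` and `W_H` are illustrative), the feedforward function always maps the same input to the same output, while the recurrent one also depends on its hidden state, that is, on what it has already processed.

```python
import math

W_X, W_H = 0.7, 0.9  # arbitrary illustrative weights

def feedforward(x):
    """No feedback: the output depends only on the current input."""
    return math.tanh(W_X * x)

def recurrent(xs):
    """With feedback: each output also depends on the previous hidden state."""
    h, outputs = 0.0, []
    for x in xs:
        h = math.tanh(W_X * x + W_H * h)
        outputs.append(h)
    return outputs

sequence = [1.0, 0.0, 1.0]
print([feedforward(x) for x in sequence])  # both 1.0 inputs give equal outputs
print(recurrent(sequence))                 # the second 1.0 gives a different output
```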