What is Apache Spark and how does it work?

Compared with predecessors such as Hadoop, Apache Spark stands out for its impressively fast performance. Speed is one of the most important factors when querying, processing and analyzing large amounts of data. As a big-data and in-memory analytics framework, Spark offers many benefits for data analysis, machine learning, data streaming and SQL.

What is Apache Spark?

Apache Spark, the data analysis framework from UC Berkeley, is one of the most popular big-data platforms worldwide and a "top-level project" of the Apache Software Foundation. The analytics engine is used to process and analyze large amounts of data in parallel across distributed computer clusters. Spark was developed to meet the demands of big data in terms of computing speed, extensibility and scalability.

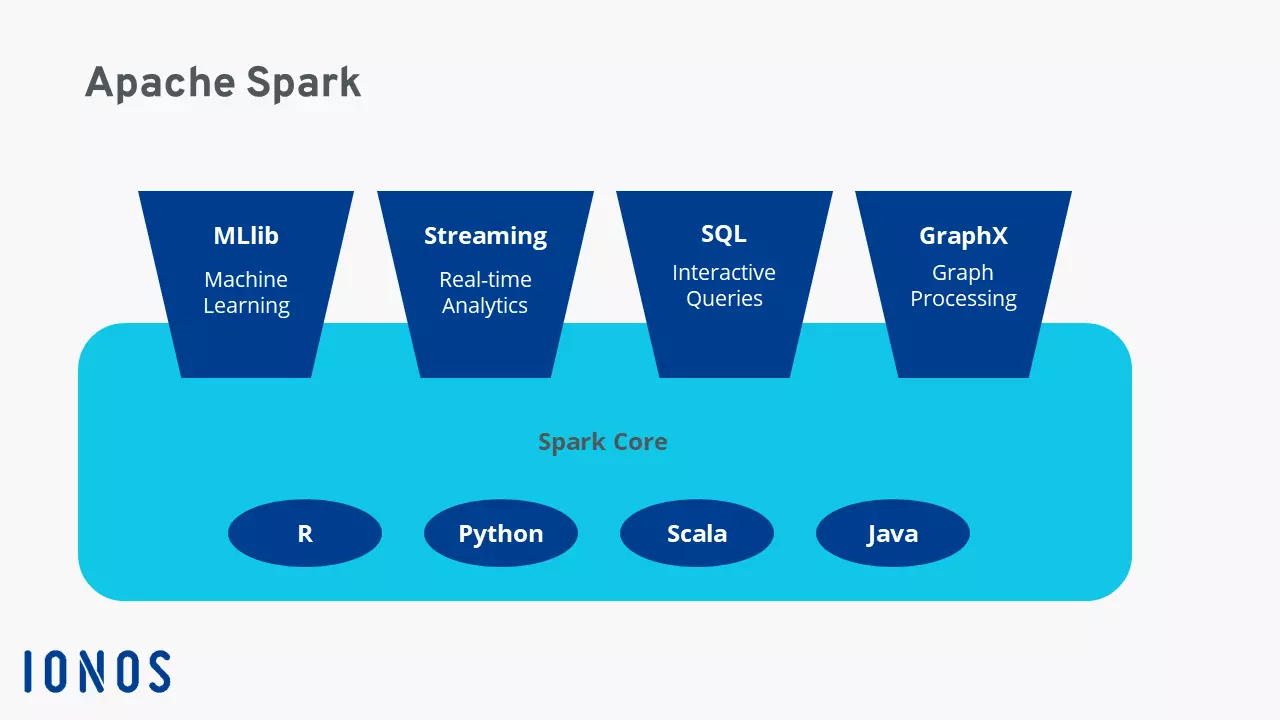

It has integrated modules that are useful for cloud computing, machine learning, AI applications, streaming data and graph data. Thanks to its power and scalability, the engine is used by large companies such as Netflix, Yahoo and eBay.

What makes Apache Spark special?

Apache Spark is a much faster and more powerful engine than Apache Hadoop or Apache Hive. It can process tasks up to 100 times faster than Hadoop MapReduce when processing takes place in memory, and up to ten times faster when data is processed on disk. This means Spark gives companies better performance while also reducing costs.

One of the most interesting things about Spark is its flexibility. The engine can run not only in standalone mode but also on Hadoop clusters managed by YARN. It also lets developers write Spark applications in different programming languages: not only SQL, but also Python, Scala, R or Java.

Other characteristics also make Spark special. For example, it doesn't require the Hadoop Distributed File System (HDFS) and can work with other data platforms such as Amazon S3, Apache Cassandra or HBase. Furthermore, it handles both batch processing, as Hadoop does, and stream processing, along with other workloads, using almost identical code. With interactive queries, you can distribute and process both current real-time data and historical data, and run multi-stage analyses in memory and on disk.

How does Spark work?

Spark works on the hierarchical primary-secondary principle (previously known as the master-slave model). The Spark driver acts as the primary node and works with a cluster manager, which manages the secondary (worker) nodes and the executors running on them. Results of the analysis are returned to the driver and from there to the client. The SparkContext, created by the Spark driver, distributes and monitors the execution of jobs and queries. It can work with different cluster managers, such as Spark's own standalone manager, Hadoop YARN or Kubernetes. The SparkContext is also used to create resilient distributed datasets (RDDs).
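
The division of labor between the driver and the executors can be sketched in plain Python. This is a single-machine model, not Spark's API: a thread pool stands in for the executors that a cluster manager would provide, and the function names (`split_into_partitions`, `run_task`, `run_job`) are hypothetical. In PySpark, a comparable job would be written roughly as `sc.parallelize(data, 8).map(lambda x: x * x).sum()`.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Driver side: cut the input into roughly equal partitions."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_task(partition):
    """Executor side: one task processes exactly one partition."""
    return sum(x * x for x in partition)


def run_job(data, num_executors=4, num_partitions=8):
    """Driver: schedule one task per partition on the worker pool, then combine."""
    partitions = split_into_partitions(data, num_partitions)
    # The thread pool plays the role of executors granted by a cluster manager.
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partial_results = list(executors.map(run_task, partitions))
    # Partial results travel back to the driver, which merges them.
    return sum(partial_results)


print(run_job(list(range(1, 101))))  # 338350
```

The design choice mirrors Spark's: the unit of work is one task per partition, so the number of partitions, not the number of workers, determines how finely the job is split.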

Spark determines which resources are used to query or store data and where query results are sent. Because the engine processes data dynamically and directly in the memory of the server cluster, it reduces latency and delivers very fast performance. In addition, it runs workflows in parallel and makes use of both virtual and physical memory.
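
Why keeping data in memory matters is easiest to see when the same data feeds several queries. The toy class below (hypothetical, plain Python, not Spark's API) counts how often its source has to be loaded. With caching switched on, the second query reuses the in-memory copy instead of reading the source again. In Spark, the corresponding step is calling `cache()` or `persist()` on an RDD or DataFrame.

```python
class ToyDataset:
    """Toy stand-in for a dataset that can be kept in memory between queries."""

    def __init__(self, load_fn):
        self._load_fn = load_fn  # e.g. a function that reads from disk
        self._in_memory = None
        self._cache_enabled = False
        self.loads = 0  # how many times the source was read

    def cache(self):
        """Ask for the data to stay in memory after the first load."""
        self._cache_enabled = True
        return self

    def rows(self):
        if self._in_memory is not None:
            return self._in_memory  # served from memory, no reload
        self.loads += 1
        data = self._load_fn()
        if self._cache_enabled:
            self._in_memory = data
        return data


def run_two_queries(dataset):
    total = sum(dataset.rows())    # query 1
    largest = max(dataset.rows())  # query 2 reads the same data again
    return total, largest


uncached = ToyDataset(lambda: list(range(1000)))
cached = ToyDataset(lambda: list(range(1000))).cache()
run_two_queries(uncached)
run_two_queries(cached)
print(uncached.loads, cached.loads)  # 2 1
```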

Apache Spark can also process data from a variety of data stores. These include the Hadoop Distributed File System (HDFS), SQL-based data stores such as Hive, and NoSQL databases. On top of this, Spark boosts performance by processing data in memory or on disk, depending on the size of the dataset.

RDDs as distributed, fault-tolerant datasets

Resilient distributed datasets are central to how Apache Spark processes structured and unstructured data. They are fault-tolerant collections of data that Spark distributes across a server cluster, where they are processed in parallel or written to data storage. They can also be passed on to other analysis models. An RDD splits a dataset into logical partitions, which are read, created and processed using transformations (such as map or filter, which produce a new RDD) and actions (such as count or collect, which return a result).
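
The difference between transformations and actions, and the role of lineage in making RDDs "resilient", can be illustrated with a small model. `MiniRDD` is a hypothetical plain-Python class, not Spark's API: it records transformations lazily and computes only when an action such as `collect()` or `count()` is called. Because each partition can be rebuilt by replaying the recorded steps on its source data, a lost partition does not require recomputing the whole dataset. Real RDDs additionally spread partitions across executors on different machines.

```python
class MiniRDD:
    """Toy, single-process model of an RDD (not Spark's API): read-only source
    partitions plus a lineage of recorded transformations."""

    def __init__(self, partitions, lineage=()):
        self._partitions = tuple(tuple(p) for p in partitions)
        self._lineage = tuple(lineage)

    # Transformations are lazy: they only return a new MiniRDD with a longer lineage.
    def map(self, fn):
        return MiniRDD(self._partitions, self._lineage + (("map", fn),))

    def filter(self, predicate):
        return MiniRDD(self._partitions, self._lineage + (("filter", predicate),))

    def compute_partition(self, index):
        """Build one partition by replaying the lineage on its source data.
        This is also how a lost partition could be rebuilt."""
        rows = list(self._partitions[index])
        for kind, fn in self._lineage:
            if kind == "map":
                rows = [fn(r) for r in rows]
            else:
                rows = [r for r in rows if fn(r)]
        return rows

    # Actions trigger the actual computation.
    def collect(self):
        return [r for i in range(len(self._partitions)) for r in self.compute_partition(i)]

    def count(self):
        return sum(len(self.compute_partition(i)) for i in range(len(self._partitions)))


rdd = MiniRDD([[1, 2, 3], [4, 5, 6]])  # two partitions
even_squares = rdd.filter(lambda x: x % 2 == 0).map(lambda x: x * x)  # nothing runs yet
print(even_squares.collect())  # [4, 16, 36]
print(even_squares.count())  # 3
print(even_squares.compute_partition(1))  # [16, 36], rebuilt from lineage
```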

DataFrames and Datasets

Spark also processes data in the form of DataFrames and Datasets. A DataFrame is an API that organizes data as a table of rows and named columns, similar to a table in a relational database. Datasets extend DataFrames with an object-oriented, type-safe programming interface. DataFrames play a particularly important role in the machine learning library (MLlib), where they serve as an API with a uniform structure across programming languages.
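
To illustrate what "rows and named columns" means in practice, the sketch below models a tiny DataFrame as a schema plus a list of rows and implements a filter and a grouped average in plain Python. The helper names and sample data are hypothetical. In PySpark, the same operations would be written roughly as `df.filter(df.salary > 50000)` and `df.groupBy("department").avg("salary")`.

```python
from collections import defaultdict

# Toy DataFrame: a schema (column names) plus rows; hypothetical sample data.
COLUMNS = ("name", "department", "salary")
ROWS = [
    ("Ana", "Sales", 52000),
    ("Ben", "IT", 61000),
    ("Cem", "IT", 58000),
    ("Dora", "Sales", 49000),
]


def where(rows, column, predicate):
    """Keep rows whose value in `column` satisfies `predicate`."""
    idx = COLUMNS.index(column)
    return [row for row in rows if predicate(row[idx])]


def avg_by(rows, key_column, value_column):
    """Group rows by `key_column` and average `value_column` per group."""
    key_idx, value_idx = COLUMNS.index(key_column), COLUMNS.index(value_column)
    groups = defaultdict(list)
    for row in rows:
        groups[row[key_idx]].append(row[value_idx])
    return {key: sum(values) / len(values) for key, values in groups.items()}


print([row[0] for row in where(ROWS, "salary", lambda s: s > 50000)])  # ['Ana', 'Ben', 'Cem']
print(avg_by(ROWS, "department", "salary"))  # {'Sales': 50500.0, 'IT': 59500.0}
```

Because operations refer to columns by name rather than by position in some opaque object, the same query reads almost identically in every language that has a DataFrame API.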

Which language does Spark use?

Spark was developed in Scala, which is also the primary language of the Spark Core engine. Spark also provides APIs for Java and Python. Python, through the PySpark API, offers many benefits for efficient data analysis, particularly in data science and data engineering with Spark. Spark also supports high-level interfaces for R, a data science language widely used for large datasets and machine learning.

When is Spark used?

Spark suits many different industries thanks to its wide range of libraries and supported data stores, its APIs for several programming languages and its efficient in-memory processing. If you need to process, query or run calculations on large and complex datasets, Spark's speed, scalability and flexibility make it a great solution for businesses, especially when it comes to big data. Spark is particularly popular with online marketing and e-commerce businesses, and with financial companies, which use it to evaluate financial data, build investment models and run simulations, AI applications and trend forecasts.

Spark is primarily used for the following purposes:

- The processing, integration and collection of datasets from different sources and applications

- The interactive querying and analysis of big data

- The evaluation of data streams in real time

- Machine learning and artificial intelligence

- Large ETL processes

Important components and libraries in the Spark architecture

The most important elements of the Spark architecture include:

Spark Core

Spark Core is the foundation of the entire Spark system. It provides the core Spark features and manages task distribution, data abstraction, scheduling and input and output operations. As its data structure, Spark Core uses RDDs distributed across multiple servers and computers. It is also the basis for Spark SQL, Spark Streaming and all the other libraries and components.

Spark SQL

Spark SQL is a particularly widely used library that lets you query RDDs and other data with SQL. To do this, Spark SQL works with DataFrames that can be registered as temporary tables. You can use Spark SQL to access various data sources, work with structured data and query data via SQL or the DataFrame API. What's more, Spark SQL supports HiveQL, the query language of Apache Hive, so you can access data in a managed Hive data warehouse.
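
The typical Spark SQL workflow is to register data as a temporary view and then query it with plain SQL. In PySpark, that is `df.createOrReplaceTempView("orders")` followed by `spark.sql("SELECT ...")`. So that the example runs without a Spark installation, the sketch below swaps in Python's built-in `sqlite3` module as a stand-in engine. The table name, function name and data are hypothetical; the point is the pattern of a temporary table plus a SQL query, not Spark's distributed execution.

```python
import sqlite3


def total_by_customer(orders):
    """Load (customer, amount) rows into a temporary table and aggregate them with SQL."""
    conn = sqlite3.connect(":memory:")  # stand-in for a Spark session
    try:
        # Comparable to registering a DataFrame as a temporary view in Spark SQL.
        conn.execute("CREATE TEMP TABLE orders (customer TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
        return conn.execute(
            "SELECT customer, SUM(amount) AS total "
            "FROM orders GROUP BY customer ORDER BY total DESC"
        ).fetchall()
    finally:
        conn.close()


print(total_by_customer([("alice", 30.0), ("bob", 12.5), ("alice", 20.0)]))
# [('alice', 50.0), ('bob', 12.5)]
```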

Spark Streaming

This high-level API provides highly scalable, fault-tolerant stream processing, allowing you to continuously process or generate data streams in real time. Spark divides these streams into small batches (micro-batches) that are processed one after another. You can also apply trained machine learning models to the data streams.
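
The micro-batch idea can be sketched in plain Python: an incoming stream is cut into small batches, each batch is processed like a small batch job, and its result is merged into running state. This is a toy model with hypothetical function names, not Spark's API. For deterministic output it batches by number of records, whereas Spark typically forms micro-batches by time interval.

```python
from collections import Counter
from itertools import islice


def micro_batches(stream, batch_size):
    """Cut a (possibly endless) iterator of records into small batches."""
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def running_word_counts(lines, batch_size=2):
    """Process each micro-batch and merge its result into running state."""
    totals = Counter()
    for batch in micro_batches(lines, batch_size):
        batch_counts = Counter(word for line in batch for word in line.split())
        totals.update(batch_counts)
        yield dict(totals)  # snapshot of the state after this batch


incoming = iter(["spark streams data", "spark is fast", "data data"])
for snapshot in running_word_counts(incoming):
    print(snapshot)
```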

MLlib machine learning library

This scalable Spark library provides machine learning code for running advanced statistical processes on server clusters or for developing analytics applications. It includes common learning algorithms such as classification, regression, clustering and recommendation, as well as workflow tools, model evaluation, distributed linear algebra and statistics, and feature transformations. You can use MLlib to scale and simplify machine learning effectively.
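
Clustering, one of the algorithm families listed above, can be illustrated with k-means. The sketch below is a single-machine version of Lloyd's algorithm in plain Python, not MLlib's API (in Python, MLlib exposes k-means as `pyspark.ml.clustering.KMeans`). It assigns every point to its nearest centroid and then moves each centroid to the mean of its points. In MLlib the assignment step runs in parallel across the cluster; here the initial centroids are passed in explicitly for simplicity, and the sample points are made up.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Lloyd's k-means: assign points to the nearest centroid, then recompute means."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = [
            tuple(sum(axis_values) / len(cluster) for axis_values in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids


points = [(1, 1), (1.5, 2), (1, 0), (8, 8), (9, 8.5), (8, 9)]
print(kmeans(points, [(0, 0), (10, 10)]))
```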

GraphX

GraphX is Spark's API for graph computation. It combines ETL, exploratory analysis and iterative graph processing in a single system.
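
PageRank is a classic example of iterative graph computation, and GraphX ships an implementation of it. The plain-Python sketch below is not GraphX's API. It runs the standard iterative PageRank update on a hypothetical three-page link graph, assuming a damping factor of 0.85 and spreading the rank of pages without outgoing links evenly across all pages.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Iterative PageRank over a directed edge list (toy, single-machine)."""
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)
    rank = {n: 1.0 / len(nodes) for n in nodes}  # start with a uniform distribution
    for _ in range(iterations):
        new_rank = {n: (1 - damping) / len(nodes) for n in nodes}
        for src in nodes:
            targets = out_links[src]
            if targets:
                # Each page passes its rank on, split evenly among its outgoing links.
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new_rank[dst] += share
            else:
                # Dangling page: spread its rank evenly over all pages.
                for dst in nodes:
                    new_rank[dst] += damping * rank[src] / len(nodes)
        rank = new_rank
    return rank


ranks = pagerank([("a", "b"), ("b", "c"), ("c", "a"), ("a", "c")])
print({page: round(r, 3) for page, r in ranks.items()})
```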

How did Apache Spark come about?

Apache Spark was developed in 2009 at the University of California, Berkeley, in the AMPLab. It has been available for free under an open-source license since 2010. In 2013, the project was donated to the Apache Software Foundation, which took over its further development and optimization. The popularity and potential of the big-data framework led the ASF to make Spark a "top-level project" in February 2014. Spark version 1.0 was released in May 2014. As of April 2023, the current version is Spark 3.3.2.

The aim of Spark was to speed up queries and tasks in Hadoop systems. Built on Spark Core, it provides task distribution, input and output functions and in-memory processing which, thanks to its distributed design, far outperform MapReduce, the standard processing framework in Hadoop.
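
A deliberately simplified sketch of the key difference: in classic MapReduce, intermediate results are written to disk between the map and reduce phases, while Spark can keep them in memory. Both functions below (hypothetical names, plain Python) compute the same word count. The first persists the intermediate (word, 1) pairs to a temporary file; the second keeps everything in memory. Real MapReduce also sorts and shuffles intermediate data across the network, which this toy omits.

```python
import json
import os
import tempfile
from collections import Counter


def word_count_disk_between_phases(lines, work_dir):
    """MapReduce style: the map phase writes its output to disk, the reduce phase reads it back."""
    map_output = os.path.join(work_dir, "map_output.json")
    # Map phase: emit a (word, 1) pair for every word and persist the pairs.
    with open(map_output, "w", encoding="utf-8") as f:
        json.dump([(word, 1) for line in lines for word in line.split()], f)
    # Reduce phase: load the intermediate pairs from disk and sum them per word.
    with open(map_output, encoding="utf-8") as f:
        pairs = json.load(f)
    counts = Counter()
    for word, one in pairs:
        counts[word] += one
    return dict(counts)


def word_count_in_memory(lines):
    """In-memory style: intermediate data never leaves memory between steps."""
    return dict(Counter(word for line in lines for word in line.split()))


lines = ["to be or not to be", "to do"]
with tempfile.TemporaryDirectory() as tmp:
    disk_result = word_count_disk_between_phases(lines, tmp)
print(disk_result == word_count_in_memory(lines))  # True
print(disk_result["to"])  # 3
```

With many chained steps, the disk round trip is repeated at every phase boundary, which is where in-memory processing gains most of its advantage.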

What are the pros of Apache Spark?

To query and process large amounts of data quickly, Spark offers the following benefits:

- Speed: Workloads can be processed up to 100 times faster than with Hadoop's MapReduce. Further performance gains come from support for both batch and stream processing, directed acyclic graphs (DAGs), a physical execution engine and query optimization.

- Scalability: With in-memory processing of data distributed across clusters, Spark lets you scale resources flexibly according to your needs.

- Uniformity: Spark is a complete big-data framework that combines different features and libraries in one application. These include SQL queries, DataFrames, Spark Streaming, MLlib for machine learning and GraphX for graph processing. Spark also supports HiveQL.

- User friendliness: Thanks to easy-to-use APIs for different data sources and more than 80 common operators for building applications, Spark brings many application options together in one framework. It is particularly convenient for writing applications interactively in the Scala, Python, R or SQL shells.

- Open-source framework: As an open-source project, Spark has an active global community of experts who continuously develop it, close security gaps and deliver improvements quickly.

- Increased efficiency and lower costs: Since Spark doesn't require high-end physical server infrastructure, it is a powerful and cost-effective platform for big-data analysis. This is especially true for compute-intensive machine learning algorithms and complex parallel data processing.
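
Directed acyclic graphs are what let Spark plan a whole job before running it: the job is broken into stages, and a stage runs only once the stages it depends on have finished. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to order the stages of a hypothetical job and group those that could run in parallel. The stage names are invented, and Spark's actual DAG scheduler also splits stages at shuffle boundaries, which this toy does not model.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical job: each stage maps to the set of stages it depends on.
stage_dependencies = {
    "read_orders": set(),
    "read_customers": set(),
    "filter_orders": {"read_orders"},
    "join": {"filter_orders", "read_customers"},
    "aggregate": {"join"},
}


def schedule(dependencies):
    """Return waves of stages; stages in the same wave have no mutual
    dependencies and could run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave as finished, unlocking its dependents
    return waves


for wave in schedule(stage_dependencies):
    print(wave)

try:
    schedule({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("cycle rejected: a cyclic plan cannot be scheduled")
```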

What are the cons of Apache Spark?

Despite all of its strengths, Spark also has some disadvantages. For one thing, Spark doesn't have its own integrated storage engine and therefore relies on many distributed components. Furthermore, in-memory processing requires a lot of RAM, and a shortage of memory can hurt performance. Finally, Spark takes a long time to learn: it takes time to understand the background processes, especially when you set up Spark on your own server or another cloud infrastructure.