Load balancing

Countless people use the internet every day to do research or go on virtual shopping sprees. Whatever their reasons, this traffic puts a burden on the web servers that keep online stores, business sites, and other information portals up and running. Service providers in particular rely on web applications that deliver fast and secure transactions, so the availability of a website plays a crucial role in converting visitors into customers.

Server problems can be particularly damaging to companies when they slow down or, in the worst case, bring business activities to a halt. An effective but costly way to counter such problems is to invest in state-of-the-art server technology. For those unable to bear such costs, efficiently distributing data traffic, known as load balancing, is an attractive alternative. This method not only absorbs traffic peaks but also increases connection reliability: because requests are spread across several servers and their resources, access speed remains more stable.

What is load balancing?

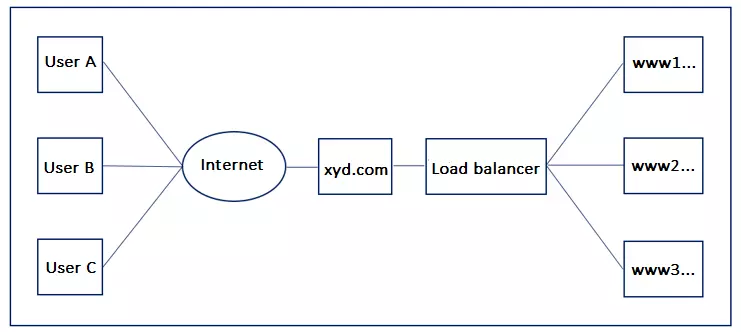

Normally, each domain is assigned to a single web server. When an internet user enters a URL into the browser’s address bar, the browser requests the website’s data from that server. If the server is overloaded, users receive an error in the form of an HTTP status code (for example, 503 Service Unavailable) indicating that the website cannot be displayed. This is where load balancing comes into play. Load balancers sit upstream of the web servers and make it possible to assign one domain to multiple servers without causing addressing problems: the load balancer itself is reachable under the website’s public domain.

The subordinate, or downstream, servers behind the load balancer are often designated www1, www2, www3, and so on. This allows a website to remain available under a single URL even though its requests are handled by different servers. Because outside requests are spread across different physical machines within the cluster, the risk of overloading any one server is reduced. And since the distribution happens behind the load balancer, visitors generally remain unaware of which server handles their requests.

Load balancing also plays a role beyond web servers, for example in computers with multiple processors. In such cases, load balancing ensures that tasks are distributed evenly across the processors so that their combined computing power is used as fully as possible.
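As a rough illustration of the multiprocessor case, the following Python sketch assigns tasks to processors with a simple greedy rule: sort the tasks by estimated cost, then give each one to the processor with the smallest total load so far (the "longest processing time first" heuristic). The task costs and the function name `balance_tasks` are hypothetical, and real operating system schedulers balance load dynamically and far more elaborately; the sketch only shows the idea of evening out the work.

```python
import heapq


def balance_tasks(task_costs, num_processors):
    """Greedy 'longest processing time first' assignment of tasks to processors."""
    # Min-heap of (current total load, processor id); all processors start idle.
    heap = [(0, p) for p in range(num_processors)]
    heapq.heapify(heap)
    assignment = {p: [] for p in range(num_processors)}
    # Handle the most expensive tasks first, each going to the least-loaded processor.
    for cost in sorted(task_costs, reverse=True):
        load, p = heapq.heappop(heap)
        assignment[p].append(cost)
        heapq.heappush(heap, (load + cost, p))
    return assignment


result = balance_tasks([3, 1, 4, 2, 5, 3], num_processors=2)
print(result)  # {0: [5, 3, 1], 1: [4, 3, 2]}, total load 9 on each processor
```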

Load balancing is particularly popular in server technology and describes a procedure in which requests are distributed to different servers in the background, without users noticing. The load balancer used for this purpose can be implemented as hardware or software.

How does load balancing work?

Requests to a web server, such as page views, first arrive at the load balancer, which then distributes the load by forwarding the access attempts to different servers. Whether the load balancer is implemented as hardware or software, the principle remains the same: a request reaches the load balancer and, depending on the method used, the device or software forwards it to one of the servers behind it.

The technical basis for this is DNS (Domain Name System): the user accesses a website only via its URL, and DNS resolves the domain name into an IP address. That IP address points to the load balancer rather than to an individual web server. Ideally, the user will not notice that the request has been forwarded to another server.

Popular methods for load balancing

How incoming requests are distributed depends on the choice of algorithm. Popular standard algorithms for load balancing are: Round Robin, Weighted Round Robin, Least Connections, and Weighted Least Connections.

Round Robin

With Round Robin, the load balancer processes incoming server requests in a queue and distributes them across a series of servers, assigning each new request to the next server in the sequence. As a result, requests are spread evenly across the load balancing cluster. Round Robin treats all requests the same, regardless of how urgent a request is or how heavily a server is already loaded. Load balancers that work this way are best suited to clusters in which all servers have the same resources; for such cases, Round Robin offers a simple and effective distribution method. If the servers differ significantly in capacity, however, Round Robin can be a disadvantage: less powerful servers may receive new requests before they have finished processing earlier ones, which can lead to server congestion.
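A minimal Python sketch of Round Robin, assuming three hypothetical backend servers named `www1` to `www3`: the load balancer simply cycles through the list, so each new request goes to the next server regardless of that server’s current load.

```python
from itertools import cycle


def round_robin(servers):
    """Return an iterator that hands out servers in a fixed, repeating order."""
    # cycle() loops over the list indefinitely: www1, www2, www3, www1, ...
    return cycle(servers)


balancer = round_robin(["www1", "www2", "www3"])
# Assign five incoming requests, one after another.
assignments = [next(balancer) for _ in range(5)]
print(assignments)  # ['www1', 'www2', 'www3', 'www1', 'www2']
```

Because the order is fixed, each server receives the same share of requests, which is exactly why the method works well only when the servers are equally powerful.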

Weighted Round Robin

Round Robin can fall short in heterogeneous server clusters, but this can be accounted for with a weighted Round Robin scheme. Here, incoming requests are distributed to the individual servers according to static weights that the administrator sets in advance. For example, a powerful server might be assigned a weight of 100 and a less powerful one a weight of 50. With this configuration, the server weighted 100 receives two requests from the load balancer in each round, while the server weighted 50 receives only one. Weighted Round Robin is therefore mainly useful for clusters whose servers have differing resources at their disposal.
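The weighting example above can be sketched in Python as follows. This is one simple way to implement weighted Round Robin, assumed here for illustration: each weight is divided by the greatest common divisor of all weights, so weights of 100 and 50 become two slots and one slot per round. Some load balancers interleave the slots more smoothly, but the proportions are the same. The server names are hypothetical.

```python
from functools import reduce
from itertools import cycle
from math import gcd


def weighted_round_robin(weights):
    """Yield server names in proportion to their static, administrator-set weights.

    weights: dict mapping server name -> positive integer weight.
    """
    # Reduce the weights by their greatest common divisor, so that
    # 100 and 50 become 2 and 1 requests per round.
    divisor = reduce(gcd, weights.values())
    schedule = [name for name, w in weights.items() for _ in range(w // divisor)]
    # Repeat the per-round schedule indefinitely.
    return cycle(schedule)


balancer = weighted_round_robin({"www1": 100, "www2": 50})
picks = [next(balancer) for _ in range(6)]
print(picks)  # ['www1', 'www1', 'www2', 'www1', 'www1', 'www2']
```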

Least Connections

Round Robin distributes requests in a fixed order and does not take into account how many connections each server is currently handling. Because some connections last much longer than others, active connections can accumulate on one server in the cluster. That server can then become overloaded even though it has not received more requests than the others. The Least Connections algorithm tackles this problem by distributing requests according to each server’s existing connections: the load balancer assigns each new request to the server with the fewest active connections. This method is recommended for homogeneous server clusters in which all servers have comparable resources. In clusters with differing resources, response delays may result.
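A minimal Python sketch of Least Connections, assuming the load balancer is told when a connection opens (`acquire`) and closes (`release`); the class and method names are hypothetical. The server with the lowest count of active connections wins each new request.

```python
class LeastConnectionsBalancer:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server; all servers start idle.
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the lowest count, so ties
        # are broken by the order in which servers were registered.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection to `server` closes.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["www1", "www2", "www3"])
first = lb.acquire()   # www1
second = lb.acquire()  # www2
third = lb.acquire()   # www3
lb.release(second)     # the connection to www2 closes
fourth = lb.acquire()
print(fourth)          # www2: it now has 0 active connections, the others 1
```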

Weighted Least Connections

Server clusters with differing performance capacities should use load balancing based on the weighted distribution of existing connections rather than plain Least Connections. This method takes into account both the administrator-defined weights and each server’s active connections, which results in a more even distribution of requests within the cluster. The load balancer assigns each new request to the server whose workload is smallest relative to its weight at that moment, typically the one with the lowest ratio of active connections to weight.
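Weighted Least Connections can be sketched by changing the selection rule of a Least Connections balancer: instead of the raw connection count, the server with the lowest ratio of active connections to weight is chosen. The weights of 100 and 50 are the illustrative values from the Weighted Round Robin section; the class name is hypothetical.

```python
from fractions import Fraction


class WeightedLeastConnectionsBalancer:
    """Pick the server with the fewest active connections relative to its weight."""

    def __init__(self, weights):
        # weights: dict mapping server name -> positive weight set by the administrator.
        self.weights = dict(weights)
        self.active = {server: 0 for server in self.weights}

    def acquire(self):
        # Fraction keeps each ratio exact, so equal ratios tie reliably;
        # ties go to the server registered first.
        server = min(self.active, key=lambda s: Fraction(self.active[s], self.weights[s]))
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection to `server` closes.
        self.active[server] -= 1


lb = WeightedLeastConnectionsBalancer({"www1": 100, "www2": 50})
picks = [lb.acquire() for _ in range(6)]
print(picks)  # ['www1', 'www2', 'www1', 'www1', 'www2', 'www1']
```

With no connections closing, the more powerful server ends up holding twice as many active connections as the weaker one, matching the 100:50 weighting.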

The advantages of a well-designed load balancing scheme

Distributing traffic across multiple servers can noticeably shorten access times, especially when many requests have to be processed simultaneously. Because traffic is automatically redirected to other servers in the cluster when one server slows down or fails, load balancing also offers greater protection against outages: a single unavailable server does not make the website unreachable. Load balancing also simplifies the maintenance of server systems.

Configurations and updates can be carried out during operation without any noticeable loss of performance. Load balancers can detect when a server is in maintenance mode and redirect its requests to the remaining servers, which makes load balancing a very flexible hosting solution.
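To illustrate how a load balancer can route around a server in maintenance, here is a sketch that extends Round Robin with an availability flag. The `set_available` method is a hypothetical stand-in: in practice the status would typically come from automated health checks or an administrator’s console rather than from direct calls like this.

```python
from itertools import cycle


class HealthAwareRoundRobin:
    """Round Robin that skips servers marked as unavailable (e.g. in maintenance)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.available = set(self.servers)
        self._order = cycle(self.servers)

    def set_available(self, server, is_up):
        # Hypothetical hook: mark a server as up or down (e.g. entering maintenance).
        if is_up:
            self.available.add(server)
        else:
            self.available.discard(server)

    def next_server(self):
        # Check each server at most once per call so the loop always terminates.
        for _ in range(len(self.servers)):
            server = next(self._order)
            if server in self.available:
                return server
        raise RuntimeError("no servers available")


lb = HealthAwareRoundRobin(["www1", "www2", "www3"])
lb.set_available("www2", False)  # www2 goes into maintenance
picks = [lb.next_server() for _ in range(4)]
print(picks)  # ['www1', 'www3', 'www1', 'www3']
```

Once maintenance is finished, marking the server as available again puts it back into the rotation without interrupting the other servers.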

Practical problems when using load balancing

Load balancing can cause problems in practice, particularly in e-commerce. Consider an example: a website visitor fills their shopping cart with items they would like to buy. These items should remain saved for the duration of the session, regardless of which page of the online store the user visits. A standard load balancer, however, may distribute the user’s successive requests to different servers. If the session data is stored only on the server that handled the earlier requests, the contents of the shopping cart would be lost.

There are two common approaches to solving this problem, both of which keep a user’s session on one server (often called session persistence or "sticky sessions"). First, the load balancer can use the user’s IP address, always forwarding requests from the same IP address to the same server. Second, it can read a session ID from the request itself, for example from a cookie, to determine which server the request must be sent to.
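Both approaches can be sketched in a few lines of Python. The server names, the function `server_for_ip`, the class `SessionRouter`, and the session IDs are all hypothetical; a real load balancer would typically extract the session ID from a cookie or request header. IP-based persistence hashes the address so the same client always lands on the same server, while the session-based variant remembers which server each session was first assigned to.

```python
import hashlib

# Hypothetical backend servers behind the load balancer.
SERVERS = ["www1", "www2", "www3"]


def server_for_ip(client_ip, servers=SERVERS):
    """IP-based persistence: the same client IP always maps to the same server."""
    # Use a stable hash; Python's built-in hash() for strings changes between runs.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


class SessionRouter:
    """Session-ID persistence: pin each session to the server that served it first."""

    def __init__(self, servers=SERVERS):
        self.servers = list(servers)
        self.assignments = {}  # session ID -> server name
        self._next = 0

    def server_for_session(self, session_id):
        if session_id not in self.assignments:
            # New session: pick the next server in Round Robin order.
            self.assignments[session_id] = self.servers[self._next % len(self.servers)]
            self._next += 1
        return self.assignments[session_id]


router = SessionRouter()
print(router.server_for_session("cart-abc"))  # www1
print(router.server_for_session("cart-xyz"))  # www2
print(router.server_for_session("cart-abc"))  # www1 again: the cart stays on one server
print(server_for_ip("203.0.113.7") == server_for_ip("203.0.113.7"))  # True
```

One trade-off of the simple modulo hashing used here: when a server is added or removed, most clients are reassigned to a different server. Consistent hashing is a common refinement that limits this reshuffling.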

Why are load balancers so important?

If you earn your money on the internet, your business can’t afford for its server to be down for any length of time. If you use only one server and it fails due to overload, your website will no longer be accessible to potential customers. This leads to several problems. First, you cannot generate revenue during the downtime: services can’t be booked and purchases can’t be made. Second, customers begin to lose trust in the company. A non-functional website is like a deserted store; it doesn’t make a good impression. It is twice as bad for users whose order is interrupted by a server overload, since they are then unsure whether the order has actually been placed.

But even if you don’t offer a service directly via the internet, your website should always be accessible. The internet has become one of the most important channels for accessing information. If a potential customer is looking for details about your business online and can’t access your website, they are more likely to turn to your competitors. A load balancer can help to minimize this risk.

How to implement load balancing at your company

Load balancing can be implemented with dedicated hardware or with software running on a virtual server. Many professional all-in-one solutions are available on the market, both as hosted services, i.e. infrastructure as a service (IaaS), and as network components for on-site IT infrastructure. Because proprietary load balancers tend to be expensive, many smaller businesses opt for open source solutions such as Linux Virtual Server. By efficiently distributing workloads across the server network, affordable options like these help keep business websites and other web projects available. Web hosting providers also offer load balancing as an add-on feature for cloud computing.
