SEO ranking factors 2016: the Searchmetrics study at a glance

Searchmetrics has published an annual, in-depth analysis of search engine rankings since 2012. However, the end of 2016 marked the last time that the ranking factors, tailored specifically to market leader Google, appeared in this form. Searchmetrics explains that the era of general SEO checklists is over and has adopted a more sophisticated approach to search engine optimization: in the future, the company’s annual report will include sector-specific analyses.

One of the reasons for this is Google’s AI system, RankBrain, which, thanks to machine learning, is much more flexible than traditional search algorithms. In June 2016, Google employee Jeff Dean told technology journalist Steven Levy that RankBrain would be involved in processing all search queries. According to Levy’s report on Backchannel, the AI system is one of the three most important factors in search engine rankings.

With its current study 'Ranking Factors – Rebooting for Relevance', Searchmetrics presents a catalog of factors that determine where a website places in the search results. The results are primarily intended as guidelines for individual, sector-specific analyses. As in previous years, the study is based on a set of 10,000 search-relevant keywords, and the current findings were interpreted in light of previous studies. We have summarized the most important results of the Searchmetrics study below.

Content factors

In light of the recent changes to Google’s core algorithm, one thing is certain for Searchmetrics: a website’s content has to be the focus of search engine optimization. One main factor here is content relevance, which Searchmetrics included as a ranking factor for the first time in 2016. Optimizing individual keywords, on the other hand, is losing significance in favor of holistic text design.

Content relevance is becoming the main ranking factor

Good SEO content is characterized by how closely it matches what the user is looking for. However, this differs from one search query to the next. The challenge content marketers face is answering as many questions as possible with a single text. To achieve this, they create holistic texts that cover different aspects of a topic and are optimized for several semantically related keywords. Holistic content therefore aims to rank well for several relevant keywords, while the individual keyword fades into the background. The Searchmetrics study analyzed the content relevance of texts in relation to the search term used, based on linguistic corpora and the concept of semantic relationships. The result isn’t surprising: 'The URLs with the highest content relevance are those in positions 3 to 6.' The relevance score decreases steadily for subsequent search results. Positions 1 and 2 need to be considered separately in this study. According to Searchmetrics, these are generally the websites of well-known brands, which benefit from factors such as recognizability, user trust, and brand image when it comes to the Google ranking, so their position isn’t necessarily due to relevant content.

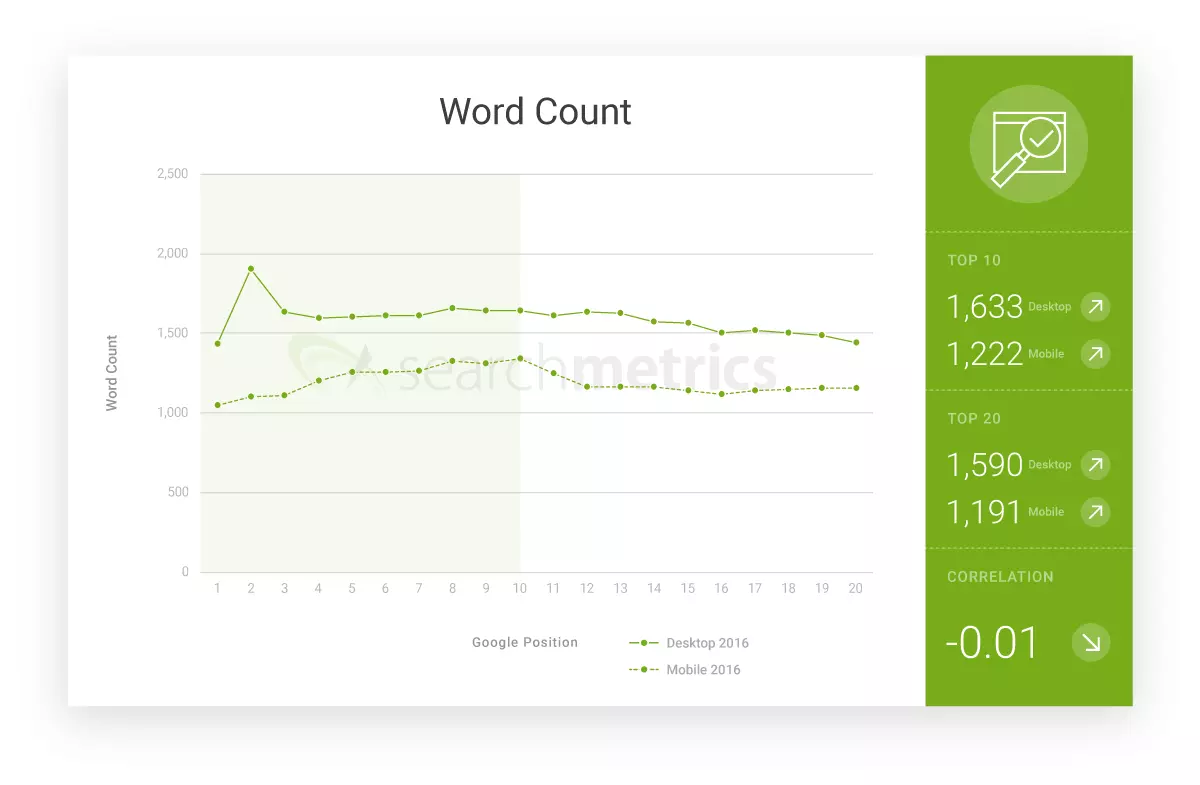

The average number of words is increasing

The average word count of well-ranking landing pages has been increasing steadily for years. According to Searchmetrics, this reflects more in-depth coverage of the respective topics in line with holistic content creation.

'The average number of words increased by 50 percent in 2016'

A comparison of desktop and mobile landing pages reveals significant differences in word count: according to the analysis, desktop versions of a website are on average 30 percent longer than their mobile counterparts.

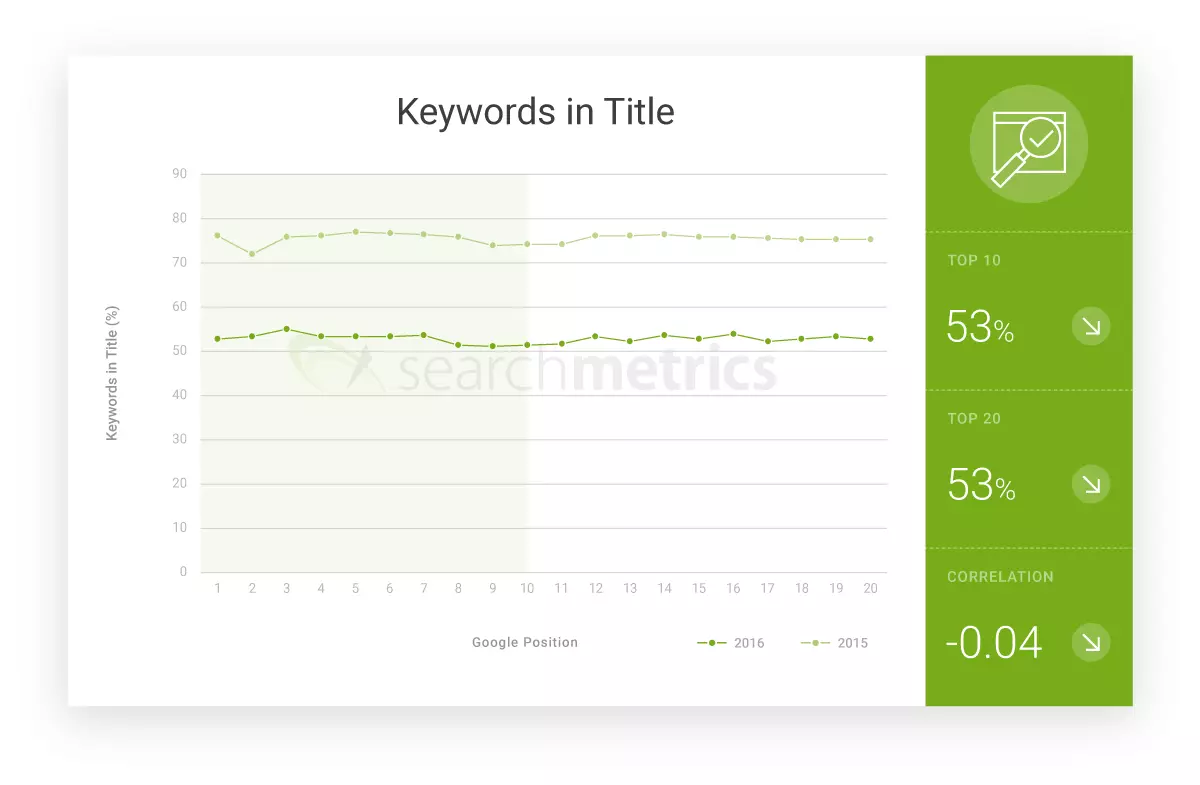

The keyword in the title is losing relevance

A long-standing rule in SEO says that the keyword needs to be in the title, placed as far forward as possible. The Searchmetrics findings show whether the search engine agrees with this assumption:

'In 2016 only 45 percent of the top 20 URLs had the keyword in the title'

This development can also be explained by the holistic approach to content creation, in which texts are optimized for topics rather than keywords. Google’s AI system is now capable of analyzing semantic relationships without relying on exact keyword matches.

The same development can be seen in headings and descriptions. According to Searchmetrics, only one-third of all top 20 landing pages have the keyword in the main heading (H1).

User signals

To determine whether users are satisfied with the URLs it suggests, Google doesn’t have to rely solely on indirect factors such as the semantic analysis of website content. In addition to the search engine itself, Google products such as the Chrome web browser, the web analytics service Google Analytics, the advertising system AdWords, and the mobile operating system Android also provide detailed information on users’ online behavior.

Google can find out whether a website delivers what it promises by combining user signals such as clicks, bounce rates, and average time on site with its huge pool of data. The Searchmetrics study provides helpful input for website operators and SEO consultants.

The average click-through rate of positions 1 to 3 is 36 percent

Users put a lot of trust in the search engine’s relevance analysis. This was already indicated in the 2014 Searchmetrics study, which determined the average click-through rate (CTR) of the analyzed top URLs.

Consequently, websites in position 1 receive the most clicks: for the top position, the Searchmetrics team calculated an average CTR of 44 percent. The percentage decreases the further down the page a website appears; at position 3, the CTR is already down to 29 percent.

'The average click-through rate of position 1 to 3 is 36 percent.'

There’s a clear increase in CTR at position 11: the first website on the second search results page actually receives more clicks than the websites at the bottom of the first page.

Compared to 2014, the average CTR of the top 10 URLs in the Google ranking has risen significantly.
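To make the CTR figures concrete, here is a minimal Python sketch of how a per-position CTR and an average over positions 1 to 3 could be computed. It assumes CTR is clicks divided by impressions, expressed in percent, and that the average is an unweighted mean of the per-position values; the study summary does not describe Searchmetrics’ exact method. The `PositionStats` type, the function names, and the click counts are hypothetical and are not data from the study.

```python
from dataclasses import dataclass


@dataclass
class PositionStats:
    """Hypothetical aggregate for one ranking position."""
    position: int     # rank on the results page (1 = top result)
    impressions: int  # how often a result at this rank was shown
    clicks: int       # how often a result at this rank was clicked


def ctr(stats: PositionStats) -> float:
    """Click-through rate in percent: clicks * 100 / impressions."""
    if stats.impressions == 0:
        return 0.0  # no impressions, so no meaningful CTR
    return stats.clicks * 100 / stats.impressions


def average_ctr(rows: list[PositionStats], positions: range) -> float:
    """Unweighted mean of the per-position CTRs for the selected positions."""
    values = [ctr(row) for row in rows if row.position in positions]
    if not values:
        raise ValueError("no rows for the requested positions")
    return sum(values) / len(values)


# Made-up counts for illustration only (not Searchmetrics data).
rows = [
    PositionStats(position=1, impressions=1000, clicks=420),
    PositionStats(position=2, impressions=1000, clicks=330),
    PositionStats(position=3, impressions=1000, clicks=240),
    PositionStats(position=4, impressions=1000, clicks=150),
]

print(average_ctr(rows, range(1, 4)))  # positions 1 to 3 -> 33.0
```

An impression-weighted average (total clicks divided by total impressions) would give a different figure whenever positions are shown a different number of times, so the averaging method matters when comparing CTR values across studies.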

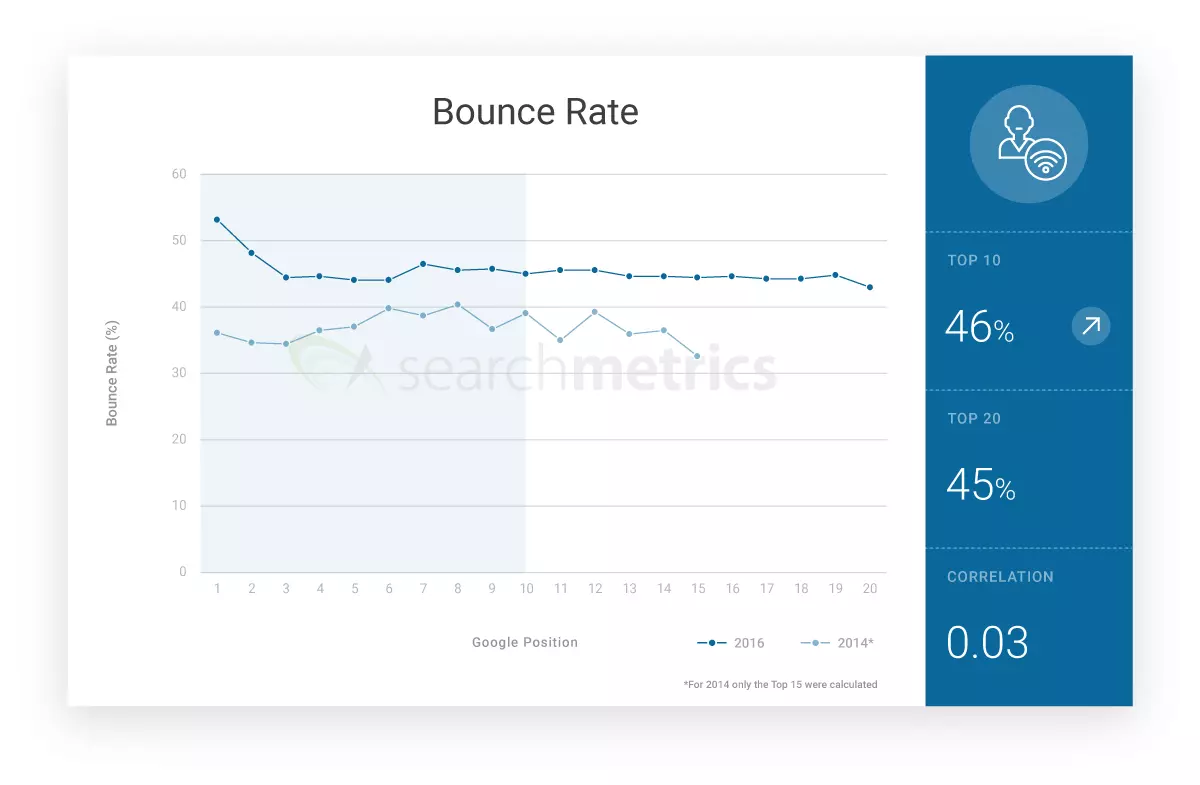

The bounce rate on the first search results page has increased to about 40 percent

In addition to CTR, the so-called bounce rate is also taken into account when assessing a website’s relevance. It shows how many users return to Google.com after clicking on a search result without viewing any other URLs on the domain they’re visiting.
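The bounce rate can be sketched as a simple ratio. This minimal Python example assumes the common web-analytics approximation that a bounce is a session in which the visitor viewed only one page on the domain; it cannot tell whether that visitor then went back to Google.com specifically. The `bounce_rate` function and the sample sessions are hypothetical.

```python
def bounce_rate(sessions: list[list[str]]) -> float:
    """Percentage of sessions that viewed exactly one page on the domain.

    Each session is the ordered list of page paths a visitor viewed
    after arriving from a search result.
    """
    if not sessions:
        return 0.0  # no traffic, nothing to measure
    bounces = sum(1 for pages in sessions if len(pages) == 1)
    return bounces * 100 / len(sessions)


# Hypothetical sessions: one of the four viewed only its landing page.
sessions = [
    ["/glossary/ctr"],
    ["/guide", "/guide/part-2"],
    ["/shop", "/shop/item", "/cart"],
    ["/blog", "/about"],
]

print(bounce_rate(sessions))  # -> 25.0
```

Note that the single-page session on "/glossary/ctr" may well be a satisfied user who found a definition and left, which is why a bounce counted this way is not automatically a sign of poor content.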

For the investigated URLs, the Searchmetrics team found an average bounce rate of about 40 percent for the top 10 positions, an increase compared to 2014. There’s a clear difference between the top two positions.

According to analysts, however, the bounce rate is only a partial indicator of a website’s relevance. Users leave websites for different reasons, and they may leave quickly even after finding what they were looking for. This is typical of quick look-up queries that a glossary or dictionary entry can answer.

According to the Searchmetrics team, one explanation for the increase in the average bounce rate could be the improved quality of Google’s algorithm. Thanks to its new AI system, the search engine is now better able to display the URL that best fits the user’s needs, so users need to click on fewer URLs to find the information they want.

The average retention time is increasing

For assessing a website’s relevance, the time between clicking on a result and leaving the site is especially important. Web analytics tools can measure how long a user spends on a website (time on site). Searchmetrics reports an average value, although a given value can have different causes.

'The time on site for the top 10 URLs is 3 minutes and 43 seconds.'

A comparison with the 2014 study shows a significant increase in time on site for the top 10 URLs. This development can be attributed to website operators providing visitors with higher-quality content because they want to rank well with Google.

Although a long time on site can be due to high-quality content, a short stay doesn’t necessarily mean that your website is of low quality. For example, a user searching for a weather report or the results of last weekend’s soccer match won’t need to spend long on the website.

Technical factors and usability

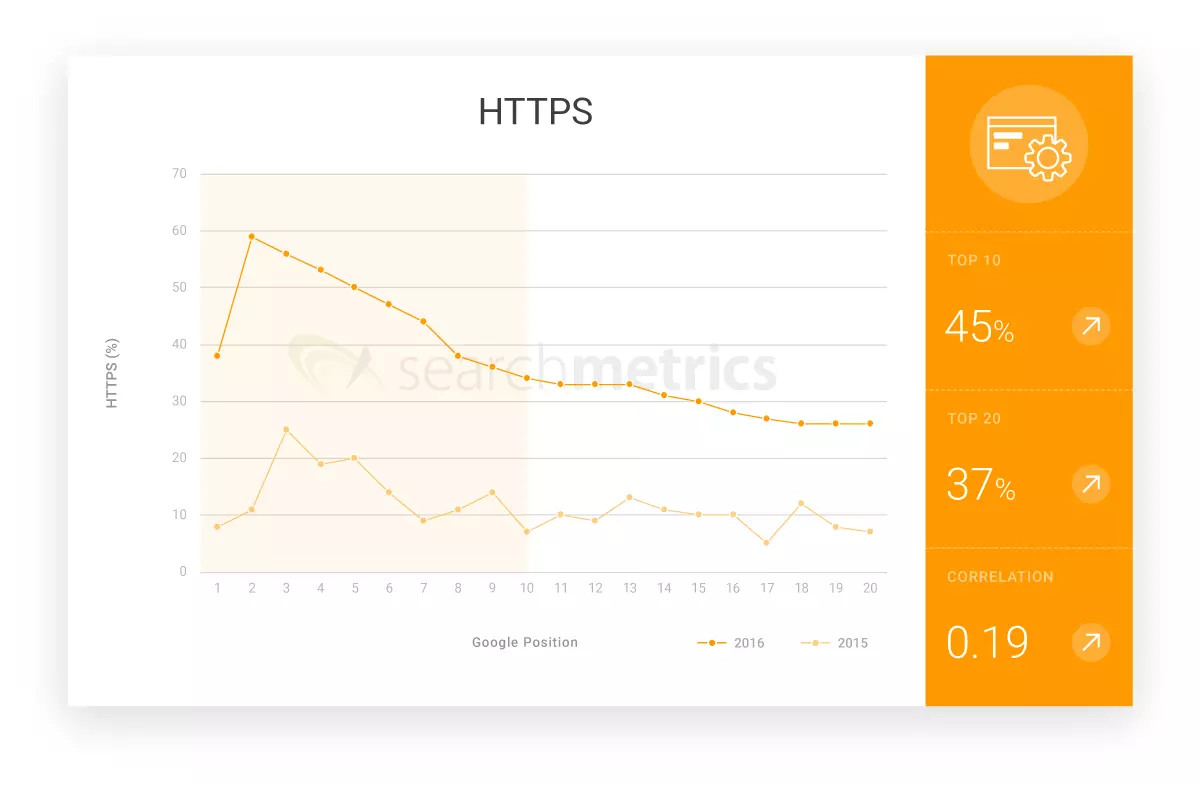

Creating high-quality, valuable content is just one part of search engine optimization. Even the best content will not reach the top positions in the search results if the basic technical requirements are missing. Modern websites must be analyzed for factors such as performance, security, and usability. In terms of technical requirements, two main SEO trends stood out in 2016. On top of general factors such as page loading time and file size, more and more website operators are striving to make mobile content available to their visitors. In addition, Searchmetrics stresses the importance of transport encryption via HTTPS for search engine rankings.

HTTPS has become a necessity in 2017

Webmasters who plan to forgo HTTPS transport encryption in 2017 will find it hard to improve their position in the search results. As early as September 2016, Google announced that, starting in 2017, its Chrome browser would label HTTP sites that process sensitive data as 'not secure'. You don’t have to be an SEO expert to figure out how much this will affect users’ willingness to click on a website. Not least for this reason, the number of HTTPS-encrypted websites is growing steadily. While only 14 percent of the top 10 websites used HTTPS the previous year, Searchmetrics recorded a significant increase to 33 percent in its current study.

'One third of websites in the top 10 are now based on HTTPS encryption.'

Mobile-friendliness is a prerequisite for a good ranking

Search queries from mobile devices are constantly increasing. According to a Google report from 2015, the search engine market leader receives more search queries from mobile devices than from desktop computers in the USA and Japan. Google’s mobile-first strategy shows how much difference mobile-friendliness makes to search engine rankings. In October 2016, the company announced that it would use its mobile index as the main index for web search in the future. It is therefore assumed that this will also affect rankings in desktop search. Webmasters who are still neglecting their mobile users should consider redesigning their website in 2017. Mobile subdomains, responsive web design, and dynamic serving are all good ways of making a site more mobile-friendly.

Social signals

As in 2014, the 2016 Searchmetrics study showed a strong correlation between top search rankings and so-called social signals: shares, likes, and comments on social networks such as Facebook, Twitter, Pinterest, and Google+. Even so, analysts still find it difficult to establish a causal link between social media presence and a good Google ranking.

Backlinks

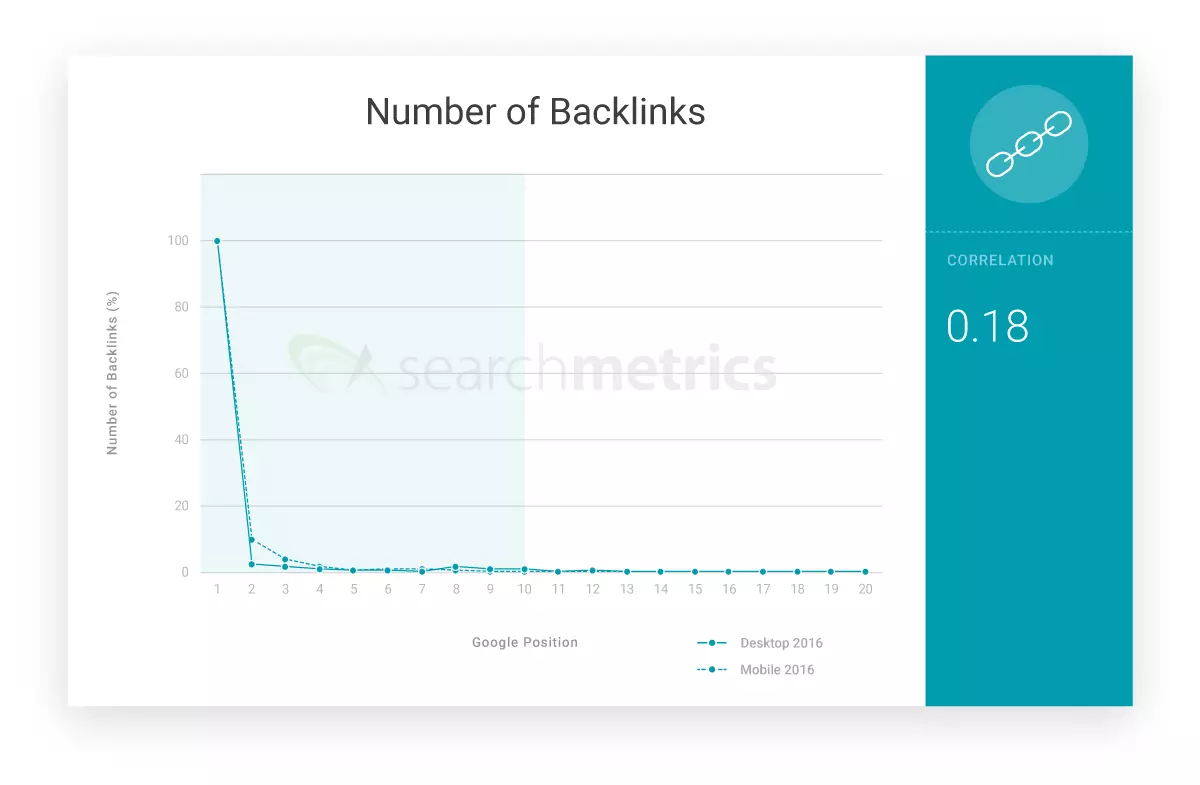

The number of backlinks pointing to a website still correlates with its position in the search results. However, link building is fading into the background as search engine optimization evolves. According to Searchmetrics, a website’s backlink profile is no longer the decisive factor for impressing Google, but rather one of many.

Today, Google can assess a website’s value through semantic context and direct user signals. As a quality signal, the backlink profile is increasingly being displaced by content relevance. Even a website with numerous inbound links from reputable sites needs to ask whether its content actually meets users’ expectations.

You shouldn’t expect backlinks to disappear completely from the Google algorithm in the future; the Searchmetrics study also confirms this. However, backlink-focused search engine optimization is now outdated.

'The correlations for backlinks are still high, but their importance for the ranking will continue to decrease.'

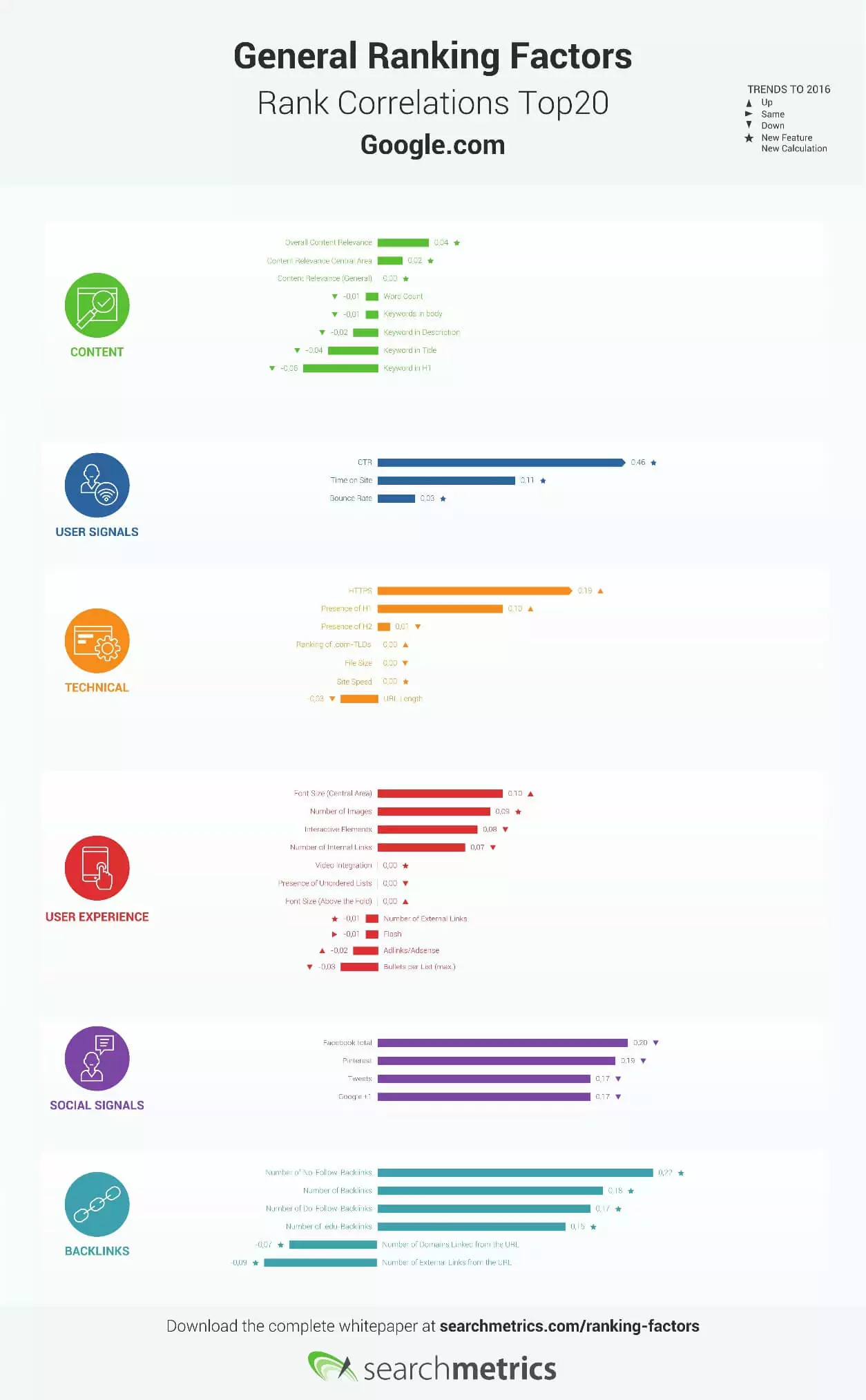

Ranking factors in correlation to the search engine position

The following graphic shows the rank correlation of general ranking factors for the top 20 URLs analyzed by Searchmetrics, along with changes compared to the previous year. Ranking factors collected for the first time in 2016, as well as recalculated factors, are marked with an asterisk.

Infographic: Top 20 Rank Correlations from Google.com

Conclusion: the future of search engine optimization

The Searchmetrics study 'Ranking Factors – Rebooting for Relevance' shows that there are still factors that correlate with a good Google ranking. However, these factors can no longer be applied across the board to every website. The different demands that users and search engines place on websites from different sectors can’t be properly captured by general ranking factors.

When the current analysis was published, the Searchmetrics team announced more sophisticated follow-up studies that examine the needs of individual sectors separately. The annual study on ranking factors and rank correlations will also be based on sector-specific keyword sets (e.g. for e-commerce, health, or finance). Webmasters should therefore use the 2016 Searchmetrics study not as a simple SEO checklist but as a basis for interpreting trends and predictions in search engine optimization on a sector-specific basis.