Google Sheets: Using the importXML function for web scraping

One of the most important properties of Google Sheets is its ability to directly import content from websites. The importXML() function in Google Sheets reads structured website content and transfers it into the Google Sheet of your choice.

The two spreadsheet programs Google Sheets and Excel may be very similar, but they do differ on a few important points. We cover this in our comparison between Excel and Google Sheets.

The XML to Google Sheets import is extremely practical if you want to make tables out of data that has already been published online. In this article, you’ll learn how you can make the most of this option. We’ll also give you a few tips on what you can do with this helpful feature of Google Sheets.

Google Sheets: The importXML() function explained quickly

The importXML() function reads structured data from online projects and enters it in the cells of a Google Sheets spreadsheet.

You can enter the function in the Google program by typing the following:

=importXML(URL, XPath)Google Sheets importXML() has only two arguments:

- URL: The web address you want to take the data from. The easiest way to do this is to copy it directly from the address bar in your browser.

- XPath: The information about where on the page the data is that you want to import.

For detailed information about XPath, have a look at our XPath tutorial.

If you type the arguments directly into the function, you must put them between quotation marks. It’s much simpler to write both arguments (without the quotation marks) into two cells in the sheet and then reference them in the formula. This way, you can save yourself the quotation marks.

Ways you can use Google Sheets importXML()

importXML() is very versatile. Its following four uses are particularly helpful:

- Creating lists of links

- Analyzing structured website data

- Extracting text

- Transferring HTML tables

In the following sections, we will take these four examples to explain to you in concrete terms how you can use importXML in Google Sheets.

Creating lists of links

Sometimes, you might want to organize interesting web addresses into an easy list with their URL, name, and a helpful brief description. The website Nodesign serves as a good basis for this use case. This collection of links presents graphical tools for developers with little to no design training. The links are displayed in boxes containing a picture, a title, and a short description. There are currently over 140 entries in the collection.

We would like to create an easy-to-read list of links from this in Google Sheets using importXML(). This is how we do it:

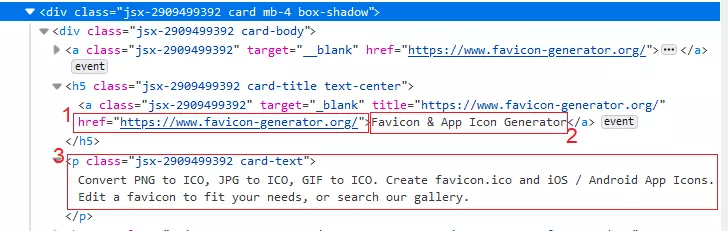

- Find the content in the source code of the web page

In this case, we need the URL, the anchor text (i.e., the text of the link) and the short description. To do this, we go to developer tools in the browser (by pressing F12 or right-clicking and choosing “Inspect”) and select the first tile. The data we want is under the <h5> tag: the URL (1), the anchor text of the link (2) and the short description (3).

- Define the XPath

The next step is to define the XPath for all three pieces of information:

URL: The URL is an “attribute” of the <a> tag, which is under the <h5> tag. The XPath is as follows:

//h5/a/@hrefAnchor text: The anchor text is the content of the <a> tag we just talked about: //h5/a. You can just take the XPath from above and remove the attribute @href.

Short description: This XPath is a little trickier, as it isn’t on the same hierarchy level as the <a> tag. If we simply took the section tagged with <p> as the XPath, the texts would no longer match up to their URLs. We therefore need to define the section containing the short description as the element that comes after the <h5> tag on the same hierarchy level:

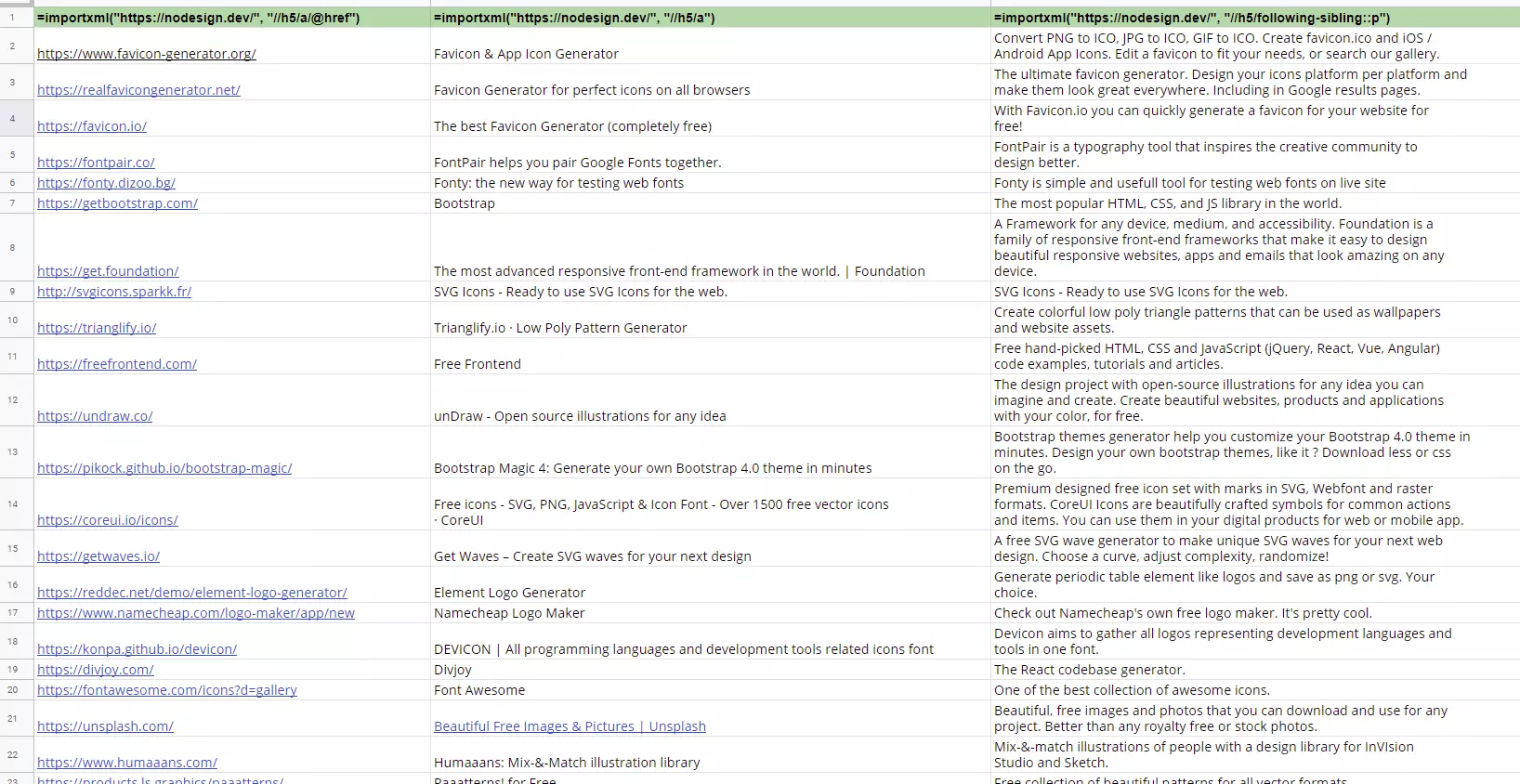

//h5/following-sibling::p- Using the function in the Google Sheet

Now, we will write an importXML() instruction in a column for each of the three pieces of data. We only need to do this on the first row, as the program will automatically fill in the rows below. The formula will also overwrite its own content by itself.

To keep things visible, we’ll add an empty row above and paste the function there again for information – only this time, we’ll put an apostrophe at the beginning, so that the formula is displayed as pure text instead of being run.

You can keep working on this table as usual – the individual cells contain the actual data itself and not the formula.

- Store, share, and edit data easily

- Backed up and highly secure

- Sync with all devices

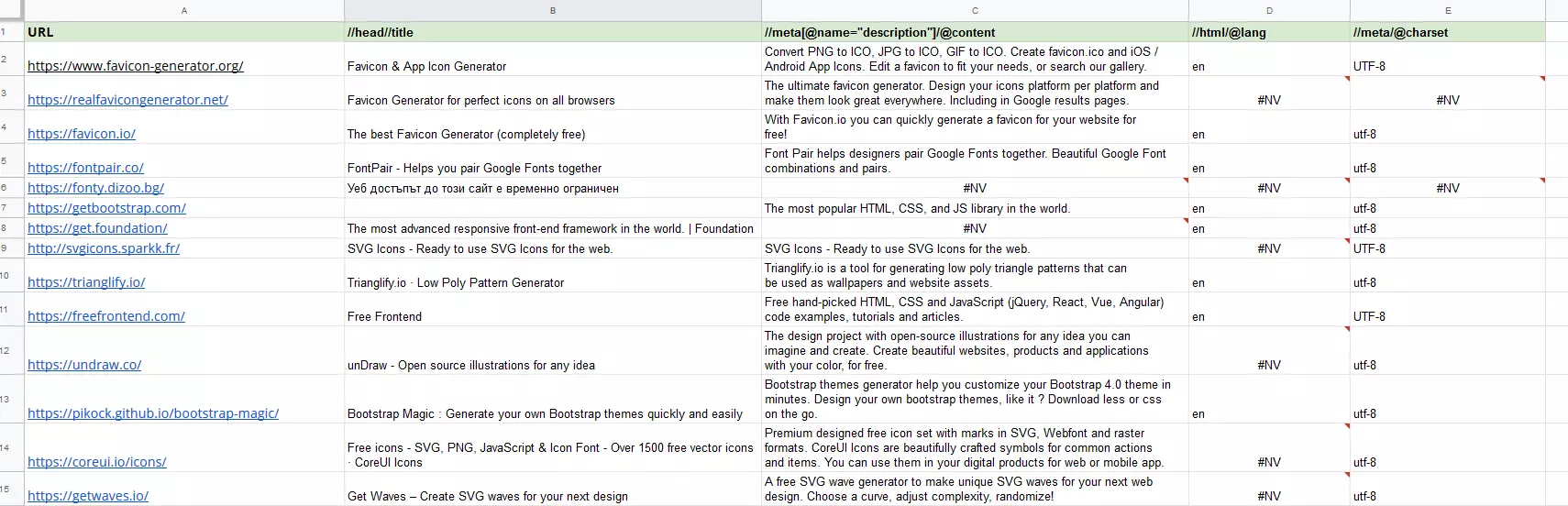

Analyzing structured website data

The list of links we made can now be analyzed in different ways depending on our needs. For example, you could list the meta title, meta description, language and encoding for all the URLs it found – this information is relevant for SEO purposes.

To do this, we should write the XPath data in the top row and create our function in the second row (example: second column):

=importXML($A2,B$1)The formula takes the URL from the first column and the XPath from the top row. So that we can drag the formula down and to the right, we must set the first column and row as an absolute reference (using the $ symbol).

#N/A results mean that the data could not be found on the web page.

To separate the table from the linked website, we can highlight it, copy it, and press Shift + Ctrl + V to paste it into a new spreadsheet.

Extracting text

Thanks to advances in text recognition using artificial intelligence, also known as natural language processing, there is an increasing need to extract large quantities of text from websites for analytical purposes.

Good examples of this are newspapers, news aggregators or RSS and press overview sites. These provide valuable information for analyzing trends.

For our use demonstration, we chose the news review site newstral.com. We simply want to extract a list of all headlines. We can do this as follows:

- Find the content in the source code of the web page

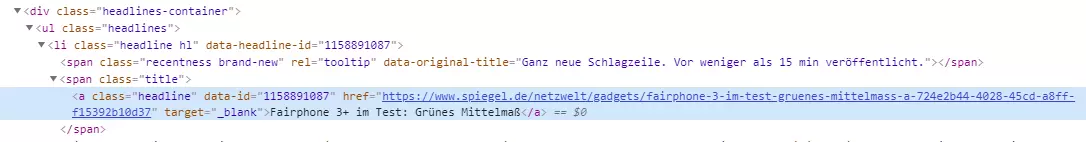

In developer tools (F12 key or right-clicking and selecting “Inspect”), we first select a headline and look at how it is structured. In this case, the structure isn’t at all complicated – the headline is the anchor text of the link (bottom line).

- Define the XPath

However, if we want to get only the headlines and not all other links on the homepage, we need to be a little more precise when writing the XPath. We only want to find <a> tags in the “headline” class, which are those that are found in the class section “headlines-container”.

//div[@class="headlines-container"]/ul/li/span/a[@class="headline"]- Using the function in the Google Sheet

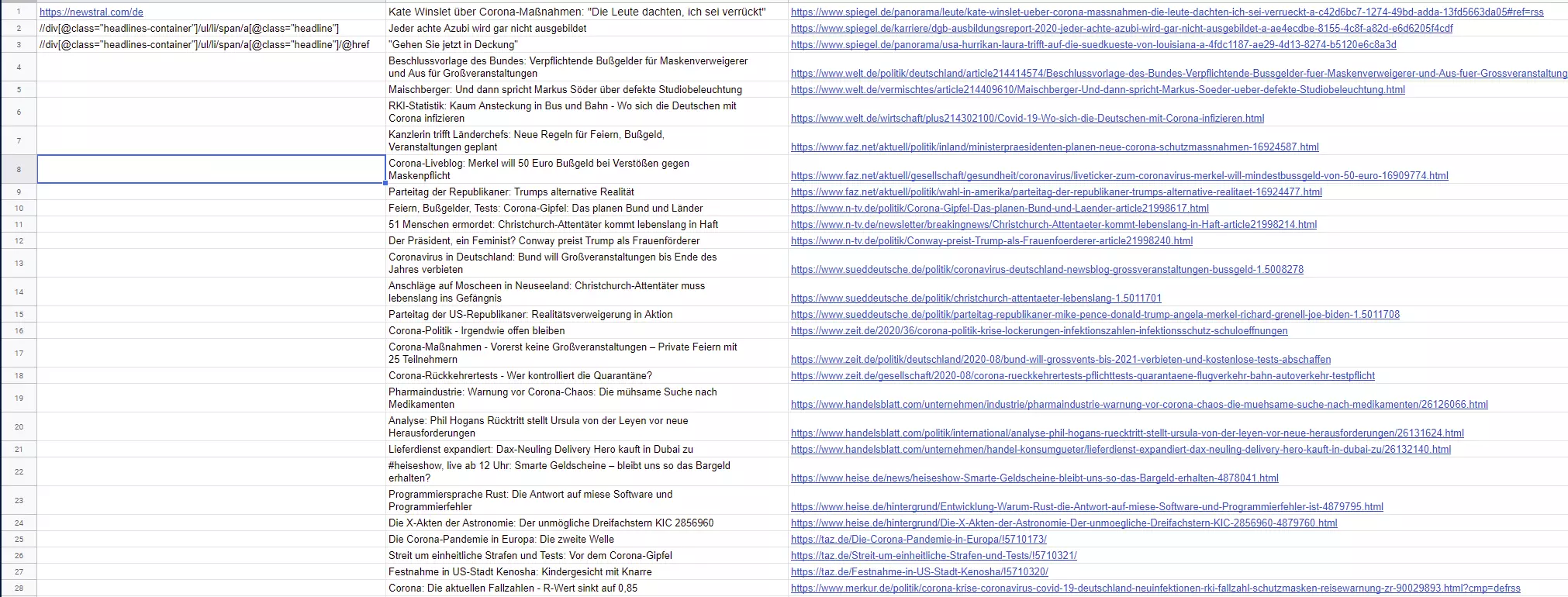

We put the URL and XPath into the first column of a new Google Sheet. From here, we can easily grab them to write the function:

(Cell B1) =importXML(A1,A2)

(Cell B2) =importXML(A1,A3)The result is a list of headlines. The best part? You can update this list at any time by pressing F5 so that you’re always seeing the latest content.

Would you like to learn more tricks for Google Sheets? Here, you can read about how to use Google Sheets drop-down lists.

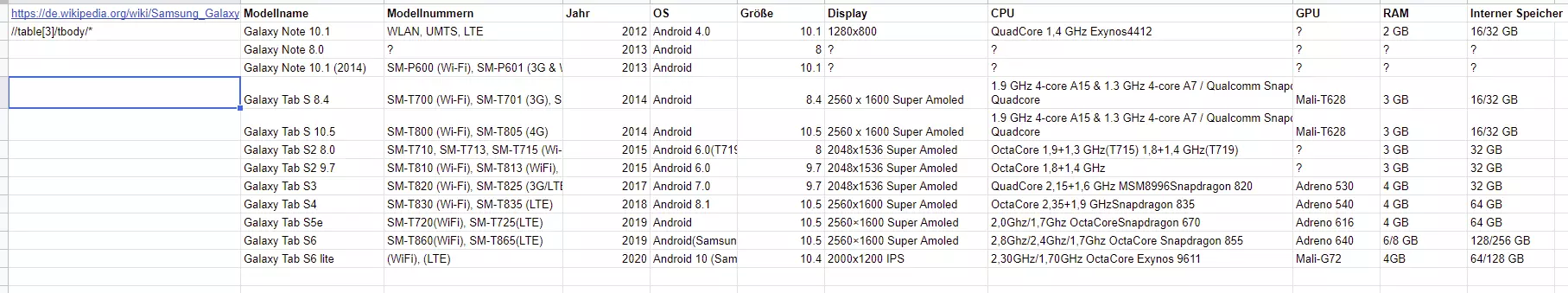

Transferring HTML tables

One single formula is enough to be able to transfer a table from a website into Google Sheets. As an example, let’s take a table in a Wikipedia article about Samsung Galaxy models. To import this table, we simply need the <table> tag in the XPath. However, we first need to find out how many <table> tags there are before the one we want. Our table is the third one on the page. The formula is therefore as follows:

=importXML(“https://en.wikipedia.org/wiki/Samsung_Galaxy”, “//table[3]/tbody/*”)Voilà! With just one formula in cell B1, we were able to display the whole table with all its rows and columns in our spreadsheet.

You’ve seen it for yourself – the XML to Google Sheets import is incredibly versatile and will save you a ton of time and work.

Many social media platforms and large online stores such as Amazon prohibit web scraping. Read the user conditions before you try out your newly acquired web scraping skills on these platforms.